Network Rail:

A Legacy of Innovation

At Oakland, our collaboration with Network Rail stands as a testament to our commitment to driving innovation through technology. Working closely with our client, we embarked on a mission to tackle challenges in knowledge management and harness the potential of AI and natural language processing.

In this case study, we look back on our journey alongside Network Rail and how we effectively harnessed our expertise in data strategy, artificial intelligence (AI), and integration to deliver an effective, long-term solution.

The Lessons Learnt Process

Network Rail is one of the UK’s biggest investors in infrastructure projects. It covers 20,000 miles of track and 30,000 bridges, tunnels, and viaducts.

Working in five-year funding cycles called control periods (CPs), Network Rail is just entering CP7 (2024-2029), during which it will invest £44 billion in the UK with a commitment to new technologies and data analytics.

Network Rail has a vast and valuable library of lessons learned from various infrastructure projects stored in SharePoint. Where data is of good quality, Network Rail is continuously improving the way information is made available for its employees to consume.

Network Rail’s Rail Infrastructure Centre of Excellence (RICOE) manages the company’s knowledge base. They realised the information was valuable and wanted to make it even more actionable. Their goal was to encourage employees to actively use the information to make improvements and drive positive change.

Being able to properly adopt and action knowledge locked away within these lessons presented a huge opportunity for efficiencies from a cost and process perspective. Given the huge scope, number of projects and financial commitment from Network Rail at any one time, the potential benefits of acting on these lessons were massive. But it required a solution that could automate outputs and link the learning to tangible actions.

The Use Case for Generative AI

The existing SharePoint site posed some challenges:

Volume of data

The sheer volume of data made it difficult to consume and users struggled to find the relevant information, resulting in fewer people using the existing SharePoint site.

Accessibility

The current site provided a knowledge management solution much like any other SharePoint system. More work could be done to further improve the way useful lessons were accessed and utilised.

If it’s not immediately there, we give up

The information was contained in a variety of formats, from videos to PDFs and PowerPoints. Having useful information that extra click or page away sometimes meant users stopped looking before they found what they needed.

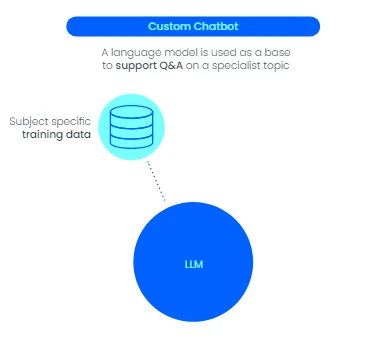

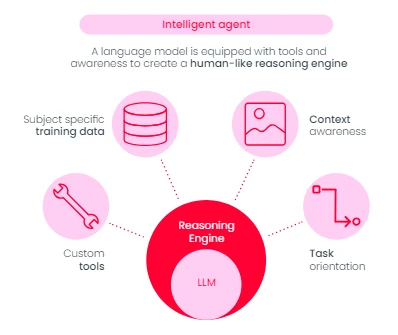

With knowledge management being a priority, it was clear that an innovative approach using Generative AI could help solve some of Network Rail’s issues. AI can support deeper, more automated analysis of textual data than other approaches, so it was the natural choice, and the situation was a textbook use case for Generative AI.

Drawing on Oakland’s decades of process, data, software, and integration expertise, the Network Rail knowledge management team selected Oakland to design and deliver a cutting-edge Generative AI application.

What were the project goals?

From multiple discussions around requirements, the following goals were confirmed for the project:

- Information from the learning from lessons library needs to be easier to find and consume for users.

- Users must be able to ask natural language questions of the chatbot and get back sensible answers, for example, “We are building a bridge. What should I know?”

- Responses must have the relevant citation to ensure the data is accurate.

- The solution must utilise a secure, standard technology approach (Azure AI Services were settled upon).

- The solution must consider accessibility options (i.e. consider the use of different languages, input methods and response styles).
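The citation goal above lends itself to a simple automated check. The following is a minimal sketch, not the project's actual implementation: it assumes answers cite sources with bracketed markers such as `[lesson_12]`, and both that marker format and the function name `check_citations` are hypothetical.

```python
import re

# Hypothetical citation marker format, e.g. "[lesson_12]".
CITATION = re.compile(r"\[([A-Za-z0-9_-]+)\]")


def check_citations(answer: str, retrieved_ids: set[str]) -> dict:
    """Compare the ids cited in an answer with the ids that were actually retrieved."""
    cited = set(CITATION.findall(answer))
    return {
        # Citations that point at a document the chatbot was given.
        "cited": sorted(cited & retrieved_ids),
        # Citations that match nothing retrieved (possible hallucination).
        "unknown": sorted(cited - retrieved_ids),
        # True only if at least one valid citation is present.
        "has_citation": bool(cited & retrieved_ids),
    }


if __name__ == "__main__":
    answer = "Allow extra curing time [lesson_12]. See also [lesson_99]."
    print(check_citations(answer, {"lesson_12", "lesson_40"}))
```

A check like this could flag answers with no valid citation for review before they reach users.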

The project sought to modernise, develop and improve the way knowledge was managed and shared within the organisation. There was a focus on speed, helping Network Rail quickly access internal knowledge resources such as lessons, procedures, and documentation, with the ability to integrate external data sources in the future. The aim was to streamline internal workflows, improve productivity, and reduce the time spent searching for information.

How did we implement the Learning from Lessons Library (LFLL) AI project?

The project so far has been delivered over multiple phases:

Proof of Concept (PoC)

Oakland initially created a successful proof of concept by developing an Azure-based AI solution in a short space of time. This solution processed data extracted from the lessons at source within the existing SharePoint site and integrated it with a large language model. The proof of concept was a rapid deployment, undertaken in 4-5 weeks, to demonstrate functionality and value in a short time frame. The chatbot was available for initial queries of the language model after just 2 weeks.

Phase 2

The focus was to take the work from the PoC phase and formalise the solution, adding functionality across a number of areas to create a more production-ready tool. The 9-week phase saw further development of the cloud infrastructure, enhanced security, pipelines for different file types, and work to streamline deployment in Network Rail’s environment in a future phase. Further data sources were also investigated, and potential users were interviewed about how the knowledge management process currently worked, to inform the future method.

Phase 3

This phase aims to deploy the solution within the Network Rail Azure environment as a production solution. Cloud resources would be configured and deployed, then tested in line with NR IT governance processes, ready for production.

What was our thought process behind the technology options?

The technology for the LFLL AI project was selected for its fit with the requirements, scalability potential, and secure data handling. Importantly, the solution also had to be manageable with the existing technology stack within Network Rail.

Azure’s OpenAI resources offered:

Simplified integration

A simple and easy-to-use API offers various endpoints that can be used for different tasks, such as text generation, summarisation, sentiment analysis, language translation, and more.

Pre-trained models

Already trained on vast amounts of data, these pre-trained models make it easier to leverage the power of AI without having to train models from scratch.

Customisation

Pre-trained models provide an opportunity to create personalised AI applications with minimal coding.

Documentation and resources

Azure OpenAI Service provides comprehensive documentation and resources.

Scalability and reliability

Hosted on Microsoft Azure, the service provides robust scalability and reliability that developers can leverage to deploy their AI applications with confidence, without having to worry about managing the underlying infrastructure.

Responsible AI

Azure OpenAI Service promotes responsible AI by adhering to ethical principles, providing explainability tools, governance features, diversity and inclusion support, and collaboration opportunities. These measures help ensure that AI models are unbiased, explainable, trustworthy, and used in a responsible and compliant manner.

Environmentally sustainable services

Microsoft has committed to being carbon negative by 2030 and is well along this journey as of 2024. It has also created a $1Bn Climate Innovation Fund to accelerate the global development of carbon reduction, capture, and removal technologies.

Ultimately, the technology for the AI solution was chosen to be scalable, secure, and high-performing, meeting project needs whilst offering a great user experience for stakeholders.

How was the project delivered?

The Network Rail LFLL’s successful delivery resulted from a collaborative effort between the Network Rail Knowledge Management team and Oakland.

Agile Planning

The project followed a main delivery plan but was carried out using an Agile method, where we:

- Planned in 2-week sprints with specific tasks that followed the overall plan.

- Finished each cycle with a demonstration to show the work completed.

- Regularly reviewed progress to learn and identify areas to improve.

We developed the solution iteratively and to an evolving plan, notifying Network Rail and discussing any changes throughout the 2-week sprints.

Technical Solution

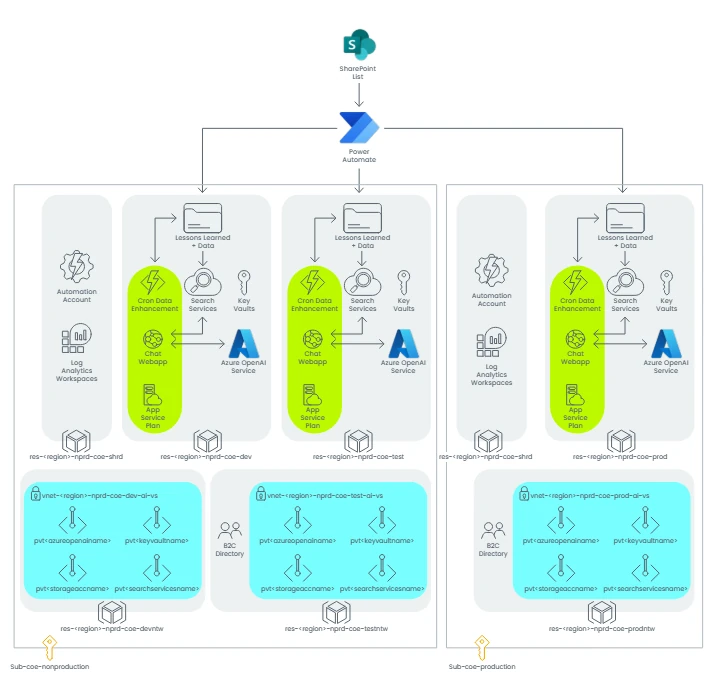

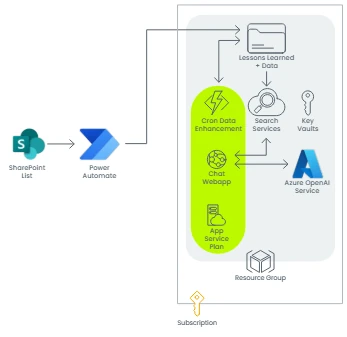

Overall Architecture Diagram

Core Component Architecture

The technical approach for the main solution was considered carefully. Azure AI Services were chosen because the solution needed to adhere to robust governance processes and because the services themselves provided the outputs needed to meet and exceed requirements.

The solution consists of a SharePoint list (the source of the lessons library) being ingested by Power Automate into an Azure Storage container. The lesson data is processed into separate text files to prepare it for subsequent use in Azure AI Services. In conjunction with this, images and document attachments are processed to extract key textual elements.
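The step of turning lesson records into separate text files can be sketched as follows. This is an illustrative sketch only: the column names (`ID`, `Title`, `Description`, `Recommendation`), the file naming scheme, and the helper functions are assumptions, and it writes to a local folder rather than an Azure Storage container.

```python
import re
import tempfile
from pathlib import Path

# Hypothetical SharePoint list columns; the real list schema is not given in the source.
TEXT_FIELDS = ("Title", "Description", "Recommendation")


def lesson_to_text(lesson: dict) -> str:
    """Flatten one lesson record into plain text, one labelled field per line."""
    lines = []
    for field in TEXT_FIELDS:
        value = str(lesson.get(field, "")).strip()
        if value:  # skip empty or missing fields
            lines.append(f"{field}: {value}")
    return "\n".join(lines) + "\n"


def write_lessons(lessons: list[dict], out_dir: Path) -> list[Path]:
    """Write each lesson to its own text file, named after its (assumed) ID column."""
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = []
    for lesson in lessons:
        # Replace characters that are unsafe in file names.
        safe_id = re.sub(r"[^A-Za-z0-9_-]", "_", str(lesson["ID"]))
        path = out_dir / f"lesson_{safe_id}.txt"
        path.write_text(lesson_to_text(lesson), encoding="utf-8")
        paths.append(path)
    return paths


if __name__ == "__main__":
    sample = [
        {"ID": 1, "Title": "Bridge deck access", "Description": "Access was granted too early."},
        {"ID": 2, "Title": "Cable routes", "Recommendation": "Survey routes before design freeze."},
    ]
    with tempfile.TemporaryDirectory() as tmp:
        for p in write_lessons(sample, Path(tmp)):
            print(p.name)
```

Keeping one file per lesson means each search result can later be traced back to a single lesson, which supports the citation requirement.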

Once all of the text is collated, it is passed to Azure AI Search for the generation of embeddings and the creation of an index.
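To illustrate the idea of embedding text and searching an index, here is a toy sketch in plain Python. It is not how Azure AI Search works internally and does not call it: word counts stand in for learned embeddings, a dictionary stands in for the index, and all function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': a bag-of-words count vector (stand-in for a learned embedding)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(docs: dict[str, str]) -> dict[str, Counter]:
    """Map each document id to its vector."""
    return {doc_id: embed(text) for doc_id, text in docs.items()}


def search(index: dict[str, Counter], query: str, top_k: int = 3) -> list[tuple[str, float]]:
    """Return the top_k (doc_id, score) pairs, most similar first."""
    q = embed(query)
    scored = [(doc_id, cosine(q, vec)) for doc_id, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    index = build_index({
        "lesson_1": "Bridge deck waterproofing failed after early access",
        "lesson_2": "Signalling cable routes were not surveyed",
    })
    print(search(index, "bridge waterproofing", top_k=1))
```

Real embeddings capture meaning rather than exact word overlap, so a query such as "viaduct" could still match a lesson about bridges; the ranking step is conceptually the same.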

Users interact with a single-page web application to engage with the chatbot and are presented with suggested prompts to try. The web app uses the Azure OpenAI Service with the GPT-3.5 Turbo model. This model was selected as it provided the best combination of price and performance for the required use case.
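One common way to combine retrieved lessons with a chat model is to place them in the prompt and ask the model to cite them. The sketch below only assembles the messages in the role/content shape used by chat-completion style APIs; it makes no API call, and the prompt wording, the `[source_id]` marker format, and the `build_messages` helper are assumptions rather than the prompt used in the project.

```python
def build_messages(question: str, passages: list[tuple[str, str]]) -> list[dict]:
    """Assemble chat messages that ground the answer in retrieved passages.

    passages is a list of (source_id, text) pairs returned by the search step.
    """
    # Label every passage with its id so the model can cite it.
    sources = "\n\n".join(f"[{source_id}] {text}" for source_id, text in passages)
    system = (
        "Answer using only the sources below. "
        "Cite the source id in square brackets after each claim. "
        "If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


if __name__ == "__main__":
    messages = build_messages(
        "We are building a bridge. What should I know?",
        [("lesson_1", "Bridge deck waterproofing failed after early access.")],
    )
    for message in messages:
        print(message["role"], "->", message["content"][:60])
```

The resulting list would be passed to the chat model, with the deployment name and credentials supplied separately by the hosting environment.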

There are three environments for the solution to align with NR’s Software Development Lifecycle and best practices. These consist of Development, Test and Production.

Presently the solution is hosted in the Oakland Tenant and access to Network Rail is provided securely via an Azure B2C directory. The next proposed phase of delivery would include us taking the solution through Network Rail’s IT Design Board and surrounding governance procedures in order to deploy the solution in Network Rail’s Azure tenant.

The benefits of the Lessons Library (LFLL)

Generative AI is a new technology, moving at speed and currently the number of use cases is small. Network Rail’s implementation of this technology is market-leading and offers limitless possibilities in the future. In the here and now, the LFLL has created a number of benefits for Network Rail:

Completely customisable

The tone, level of detail, language, format and much more are able to be tailored to provide a response in the way a user would like.

Improved efficiency

By using a chatbot interface with a large language model, lessons learned can be understood and adopted far quicker than before.

Broader data sources

The chatbot draws on the various attachments and files associated with the lessons as well as the lesson text. This means the data being returned is more accurate and useful than before.

Improving knowledge management

The new language model gives users comprehensive, real-time reporting for data-driven insights and strategic decision-making. As an example, Network Rail has over 1,900 acronyms, and it is now much easier for everyone to identify them and understand what they stand for.

Summary

We hope you found this AI case study useful.

The project has leveraged the power of Generative AI to create a cloud-based AI model that can be searched using natural language. This model is the engine behind an interactive chatbot that utilises the content currently stored in a SharePoint library containing thousands of valuable lessons learned.

The Lessons Library (LFLL) is a fantastic example of Network Rail’s use of cutting-edge technology to improve efficiency.

It is important to point out that the high quality of the data played a major part in the success of this project. Many large organisations struggle with knowledge management, and it is testimony to Network Rail that Oakland was able to build this solution in a relatively short timescale.

This is a hugely exciting project in a fast-moving field with very limited use cases currently available. The potential for this technology in the future is only limited by imagination.

Network Rail is committed to delivering a reliable, efficient and sustainable rail service. With continued teamwork between Network Rail and Oakland, Network Rail can propel itself towards a more digitised, efficient, and innovative future.

If you would like to talk about any elements of this case study, please drop us a line at hello@weareoakland.com

Network Rail saw Knowledge Management as a prime candidate for AI. By placing AI at the heart of the solution, they can unlock a wealth of possibilities to make a real, positive impact for years to come. This is a gamechanger for sharing and leveraging knowledge across the organisation.

Jack Evans – Principal Consultant