In this third article of our Project Analytics series, we build on the team roles introduced in our last article by explaining how everything comes together to meet the data engineering requirements of a Project Analytics initiative.

Getting to grips with the reality of project data

If you’re focused on delivering Project Analytics, sooner or later you will have to address both data availability and project processes.

When it comes to data, we’re all familiar with the concept of ‘rubbish in, rubbish out’.

In an ideal world, you would have a single repository of clean data, but within the project world, this ‘single version of the truth’ remains elusive for a variety of reasons:

- Lack of consistent technology, data standards, and processes within organisations

- Fragmented supply chains across suppliers and partners

- Different requirements between customers and stakeholders

- Lack of cross-referencing between systems, so they can’t be joined up

Issues like these create a culture where there is a begrudging acceptance that the data will need to be manually joined up before it can be analysed.

Once you start manipulating the data, you soon notice how poor its quality is, and cleaning it up takes even more manual effort before it can be joined up and made ready for analytical processing.
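As a small illustration of that cleaning effort, here is a minimal Python sketch. It assumes two hypothetical problems common in exported project data: project codes formatted inconsistently, and dates written in several formats. The formats, field names and helper functions are illustrative assumptions, not taken from any specific system.

```python
from datetime import date, datetime
from typing import Dict, Optional

# Assumed (hypothetical) date formats found across different source exports.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d-%b-%Y")


def normalise_project_code(raw: str) -> str:
    """Upper-case the code and keep only letters and digits, e.g. ' prj-0042 ' -> 'PRJ0042'."""
    return "".join(ch for ch in raw.strip().upper() if ch.isalnum())


def parse_date(raw: str) -> Optional[date]:
    """Try each known format in turn; return None if none of them match."""
    text = raw.strip()
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def clean_record(record: Dict[str, str]) -> Dict[str, object]:
    """Return a cleaned copy of one hypothetical schedule record."""
    return {
        "project_code": normalise_project_code(record["project_code"]),
        "finish_date": parse_date(record["finish_date"]),
    }


if __name__ == "__main__":
    print(clean_record({"project_code": " prj-0042 ", "finish_date": "31/03/2024"}))
```

Returning `None` for an unrecognised date, rather than guessing, keeps the problem records visible so they can be routed back to whoever owns the source data.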

Building a single view of your Project Analytics data

When building a single view of the data, you don’t always need to move all of it into one system, but you do need to be able to link data from across multiple systems via cross-referenced identifiers.
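Linking via cross-referenced identifiers can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the finance and scheduling systems, their identifiers and the `XREF` mapping are all hypothetical, and in practice the cross-reference would live in a maintained table rather than in code. Reporting the unmatched records is deliberate, since gaps in the cross-reference are usually among the first data quality issues you will need to chase.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class CostLine:
    cost_code: str        # identifier used by a hypothetical finance system
    actual_spend: float


@dataclass
class Activity:
    activity_id: str      # identifier used by a hypothetical scheduling tool
    percent_complete: float


# Hypothetical cross-reference table maintained by the team:
# finance cost code -> schedule activity ID.
XREF: Dict[str, str] = {"CC-100": "A1010", "CC-200": "A1020"}


def link(costs: List[CostLine], activities: List[Activity]) -> Tuple[List[tuple], List[str]]:
    """Join the two sources through XREF, leaving each source's records unchanged.

    Returns the linked rows plus the cost codes that could not be linked.
    """
    by_id = {a.activity_id: a for a in activities}
    linked: List[tuple] = []
    unmatched: List[str] = []
    for cost in costs:
        activity_id = XREF.get(cost.cost_code)
        activity: Optional[Activity] = by_id.get(activity_id) if activity_id else None
        if activity is None:
            # Either no mapping exists, or the mapped ID is missing from the schedule.
            unmatched.append(cost.cost_code)
        else:
            linked.append((cost.cost_code, activity.activity_id,
                           cost.actual_spend, activity.percent_complete))
    return linked, unmatched


if __name__ == "__main__":
    costs = [CostLine("CC-100", 5000.0), CostLine("CC-300", 750.0)]
    activities = [Activity("A1010", 40.0)]
    print(link(costs, activities))
```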

For many organisations, the ‘big corporate’ approach is to invest in a single analytics tool and implement a ‘big bang’ initiative that builds out the analytics capability in one push. This is great when it works, but in our experience, these ‘big bang’ approaches are often:

- Expensive and slow to deliver, hindering tactical exploitation of the here and now

- Hard to scope, because requirements are difficult to pin down before project kick-off

- Prone to a high failure rate

- Impacted by an ‘imagination gap’ between the project analytics strategists and those who understand the data at a granular level

We recommend a more incremental (Agile) approach so that you can get stakeholders excited and moving quickly whilst de-risking the project by delivering only what you need at each phase.

From a technical perspective, we’ve found the Agile approach flexible enough to align with the changing landscape of the business. By building incrementally, you deliver immediate value whilst contributing to any longer-term ‘supertanker’ data and technology programmes coming down the line.

Taking an Agile approach in this way requires the type of data team we introduced in the second article of this series, combined with the data engineering strategy set out in the rest of this article.

Designing your data engineering platform

Firstly, you’re going to need to know your data, a topic we covered in last week’s article. As discussed, you need to pay close attention to your data processes and any discovered issues.

It helps to focus on discovering and resolving smaller problems first to demonstrate that Project Analytics is achievable and delivers value, so choose your battles carefully.

You will need to weigh up the benefits against the risks and consistently illustrate the benefit of Project Analytics, even if stakeholders are not explicitly asking for proof.

Starting from the right point

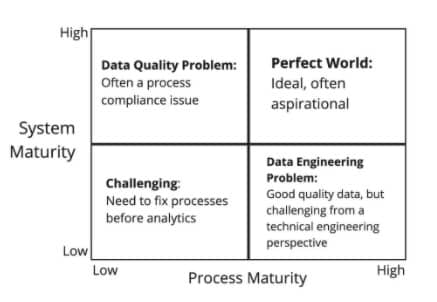

Knowing the maturity of your systems and associated data processes is vital for understanding where to start your Project Analytics journey.

You can use the following grid to help establish your starting point based on your system and data process maturity. Elon Musk may have made the goal of ‘moon shots’ popular – but we find that measured improvements from an agreed starting point make more sense :

Designing your data architecture for the future

Selecting an architecture is not straightforward because you’ll likely have to choose between:

- Adopting a ‘big corporate’ Business Intelligence/Data Analytics platform

- Buying from software vendors who claim their project tool ‘has all the analytics you’ll ever need’ (but which is likely to be lacking in key areas)

- Building a flexible data architecture and analytics platform that allows you to ingest data from across internal and external systems, using a suite of tools (the right tool for the right job)

Our preferred option is the third: a flexible architecture. It doesn’t lock you into a particular project analytics or software vendor, and it allows you to build incrementally.

Whichever option you go for, you must have the relevant expertise on your project to make the right choices.

Sample architecture for building out a Project Analytics platform

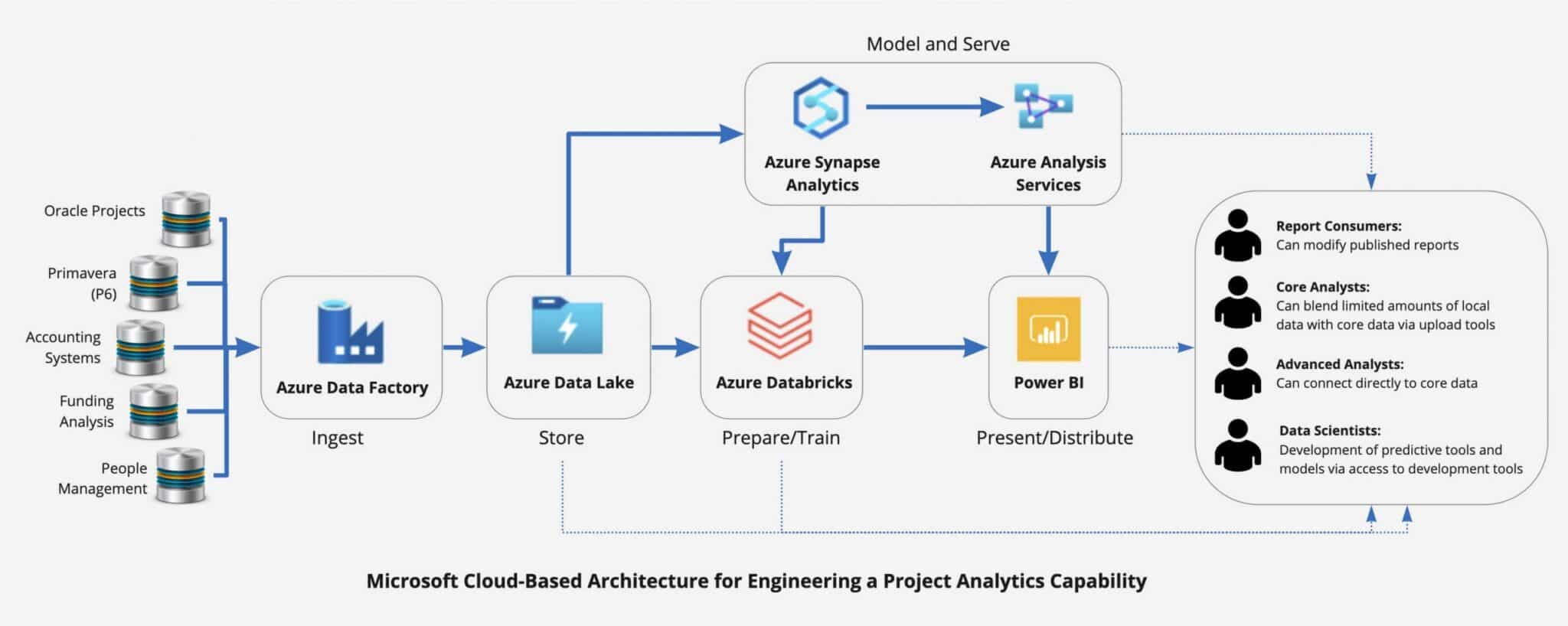

The following diagram is a standard architectural blueprint based on a Microsoft design for ingesting, processing, and presenting your Project Analytics data:

Because it’s a combination of Microsoft and open source technologies, none of it requires proprietary project software, so you’re not tied into any specialist vendors.

Because it is built on Microsoft technology, this architecture also integrates with the popular data formats, including the most common of all: Microsoft Excel.

We find it offers a flexible and relatively straightforward Project Analytics architecture because:

- It’s future-proof: Microsoft carries negligible risk of becoming defunct

- IT and technical teams rarely object to it and are comfortable with maintaining the different elements

- If you’re using Microsoft already, it will fit right into your corporate IT strategy and support structures

- Expertise is widely available and easy to find

Please note: Similar architectures are also available for Amazon Web Services (AWS) and Google Cloud if your organisation does not wish to build on Microsoft architecture. Contact us for details of alternative approaches.

The key thing to remember is that these architectures are easy to understand conceptually, but a project team building this type of architecture for the first time can easily get lost.

Data architectures like this are no different from any other discipline on a major project. If you don’t have the knowledge in-house, first seek the right level of external expertise, then build up your internal capabilities over time.

For those new to this type of architecture, here is a simplified overview of each component:

- Azure Data Factory: A cloud-based data integration service that allows you to build data pipelines and workflows that ingest, process, and export data from a vast number of systems and databases.

- Azure Data Lake: A cloud-based repository for housing your Project Analytics information in an accessible, secure and flexible format.

- Azure Databricks: An analytical tool that allows you to accomplish anything from simple reporting and presentation all the way through to machine learning analytics.

- Azure Synapse Analytics/Analysis Services: Think of these as providing a scalable repository for housing cleansed and prepared data ready for the business to analyse, along with the necessary services to let you govern, deploy, test, and deliver the final analytics data.

- Power BI: The popular suite of business intelligence tools for sharing reports and detailed analyses securely across the organisation.
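To show how data flows between these components, here is a deliberately simplified, in-memory Python sketch. It does not call any Azure SDK or service API; each hypothetical function only stands in for the role the corresponding component plays, and the CSV layout and the `raw` zone name are assumptions for illustration.

```python
import csv
import io
from typing import Dict, List

# Each function stands in for the *role* of one component; nothing here calls Azure.


def ingest(source_csv: str) -> List[Dict[str, str]]:
    """Azure Data Factory role: pull rows out of a source system (here, a CSV string)."""
    return list(csv.DictReader(io.StringIO(source_csv)))


def land(lake: Dict[str, List[Dict[str, str]]], zone: str, rows: List[Dict[str, str]]) -> None:
    """Azure Data Lake role: keep an untouched copy of what was ingested."""
    lake.setdefault(zone, []).extend(rows)


def transform(rows: List[Dict[str, str]]) -> List[Dict[str, object]]:
    """Azure Databricks role: cleanse and type the raw rows."""
    return [{"project": r["project"].strip().upper(), "spend": float(r["spend"])} for r in rows]


def serve(rows: List[Dict[str, object]]) -> Dict[str, float]:
    """Azure Synapse Analytics / Analysis Services role: aggregate into an analysis-ready model."""
    totals: Dict[str, float] = {}
    for r in rows:
        totals[r["project"]] = totals.get(r["project"], 0.0) + r["spend"]
    return totals


def report(totals: Dict[str, float]) -> str:
    """Power BI role: present the curated figures (here, as plain text)."""
    return "\n".join(f"{project}: {value:,.0f}" for project, value in sorted(totals.items()))


if __name__ == "__main__":
    lake: Dict[str, List[Dict[str, str]]] = {}
    land(lake, "raw", ingest("project,spend\n alpha ,1200\nALPHA,800\nbeta,500\n"))
    print(report(serve(transform(lake["raw"]))))
```

Keeping the raw copy separate from the cleansed output means a transformation can be corrected and re-run without re-ingesting from the source system, which is one reason to land data in the lake before processing it.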

Considerations for purchasing a separate Project Analytics solution

Some organisations will undoubtedly opt to go down a separate path and procure a different product or tool to deliver their Project Analytics initiative.

If so, be sure that the technology can deliver what the sales literature claims.

You will be changing source/target systems over time, so how will your proposed analytical solution cope, and what are the risks of getting locked into a single vendor?

Architectures such as the one above, and their AWS equivalents, promote ‘decoupling’ and open configurations that allow improved solutions to be ‘slotted in’ over time to enhance performance, reduce costs, and deliver a better overall customer experience.
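In code, the same decoupling principle often takes the form of an interface with swappable adapters. The Python sketch below is illustrative only: `ProgressSource` and both adapters are hypothetical, and a real platform would apply the idea at the pipeline and storage level rather than in a single script. The point is that the analytics function stays the same when a source system is replaced; only a new adapter is written.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Protocol, Tuple


class ProgressSource(Protocol):
    """Interface the analytics code depends on: (activity_id, percent_complete) pairs."""

    def fetch_progress(self) -> Iterable[Tuple[str, float]]: ...


class LegacyScheduleAdapter:
    """Hypothetical adapter for an existing scheduling tool's CSV-style export."""

    def __init__(self, rows: List[Dict[str, str]]) -> None:
        self._rows = rows

    def fetch_progress(self) -> Iterable[Tuple[str, float]]:
        return [(row["id"], float(row["pct"])) for row in self._rows]


@dataclass
class PlatformRecord:
    code: str
    progress: float  # this hypothetical system stores progress as a 0.0 to 1.0 fraction


class NewPlatformAdapter:
    """Hypothetical adapter for a replacement system with a different record shape."""

    def __init__(self, records: List[PlatformRecord]) -> None:
        self._records = records

    def fetch_progress(self) -> Iterable[Tuple[str, float]]:
        # Convert the fraction to a percentage so both adapters return the same units.
        return [(rec.code, rec.progress * 100.0) for rec in self._records]


def average_progress(source: ProgressSource) -> float:
    """Analytics logic sees only the interface, never a vendor-specific format."""
    values = [pct for _, pct in source.fetch_progress()]
    return sum(values) / len(values) if values else 0.0


if __name__ == "__main__":
    old = LegacyScheduleAdapter([{"id": "A1", "pct": "40"}, {"id": "A2", "pct": "60"}])
    new = NewPlatformAdapter([PlatformRecord("A1", 0.4), PlatformRecord("A2", 0.6)])
    print(average_progress(old), average_progress(new))
```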

Despite the naysayers, the benefits of modern cloud solutions are hard to match.

The Project Analytics journey so far, and the next steps

In this series, we started off by examining the evolution of Project Analytics, how it is benefitting the Major Projects sector, and some foundational principles for getting started.

In the second article in this series, we examined the skills required to deliver effective Project Analytics and the steps required when building a case for smarter Project Analytics.

This article explained how to demonstrate the viability of your data analytics platform and explored what’s required to showcase some early benefits.

In the fourth article, you will learn how to extend a Project Analytics pilot into a production-ready solution that is fit for the future.