Over the past few years, the drive for organisations to make better use of their data has become universal across industry sectors. However, starting this journey can be difficult for many companies, which are unlikely to have had a defined data strategy from the outset and tend to hold a wide variety of data, ranging in both quality and usefulness. This is compounded by the tendency of data literature to focus on the shiny end of the data space, e.g. Uber's new machine vision technology, rather than a large manufacturer moving into advanced analytics for the first time.

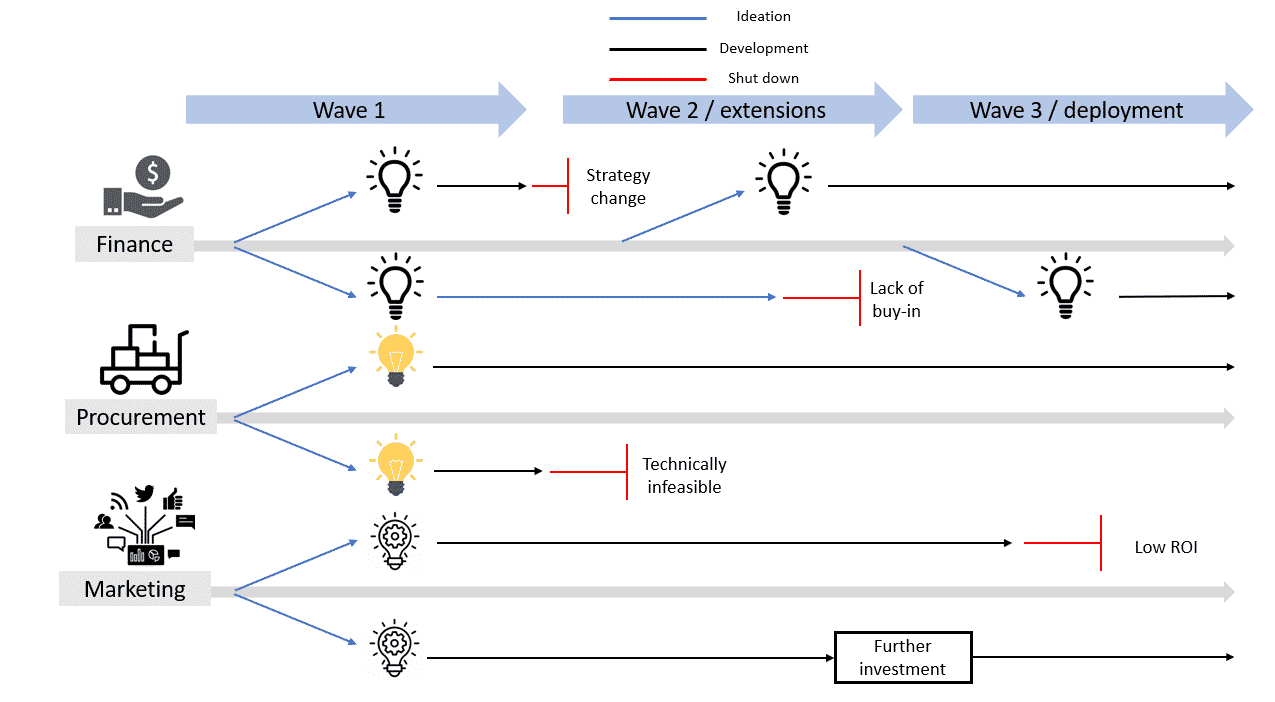

One potential mechanism for understanding where the value in a company’s data may lie is the small-scale pilot, which combines the technical validation of a new idea with a go / no-go decision based on the estimated business value. This approach allows ideas to be tested quickly and efficiently, removing from the pool those that either show little value to the business or have no solid technological foundation. It is a high-throughput approach that lets companies realise the value of their data faster, spinning up several pilots in parallel and quickly pruning and replacing those that show little merit. It does, however, benefit from an R&D-based mindset, where no idea is too precious to remove and people and infrastructure resources are used intensively over a short period.

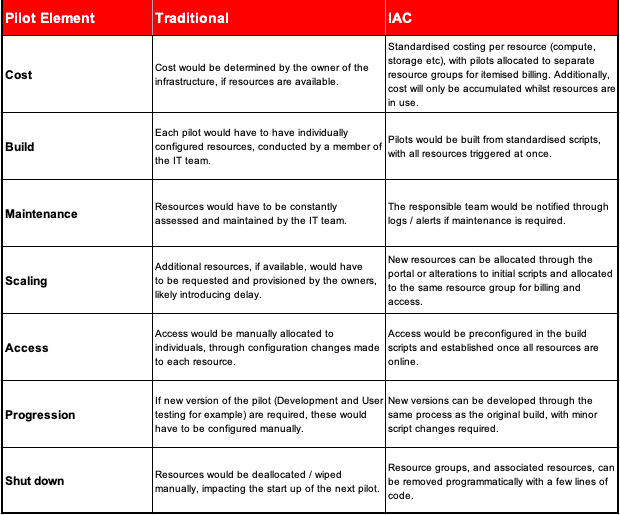

With the latter point in mind, a key factor in making pilots work is being as efficient as possible with those resources. This has become substantially easier over the last couple of years thanks to advances in the Infrastructure as Code (IAC) movement. In short, IAC allows cloud resources to be scripted and then deployed at the click of a few buttons. A good metaphor for this process is a blueprint that maps out the elements required for a house, i.e. the types of room and the number of each, with the house then being built rapidly from ready-made modular components.
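To make the blueprint metaphor concrete, here is a minimal sketch in plain Python. It is not a real IAC tool: `ResourceSpec`, `expand_blueprint` and the resource kinds are hypothetical names chosen for illustration. The blueprint lists which kinds of resource are needed and how many of each, and a function expands it into the concrete resources that a deployment would create.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResourceSpec:
    """One line of the blueprint: a kind of resource and how many are needed."""
    kind: str       # hypothetical kinds, e.g. "storage", "compute", "database"
    count: int = 1


def expand_blueprint(project, blueprint):
    """Expand a blueprint into the names of the concrete resources to create."""
    names = []
    for spec in blueprint:
        for index in range(1, spec.count + 1):
            names.append(f"{project}-{spec.kind}-{index}")
    return names


# The "house": room types and the number of each that is required.
blueprint = [
    ResourceSpec("storage"),
    ResourceSpec("compute", count=2),
    ResourceSpec("database"),
]

resources = expand_blueprint("pilot", blueprint)
for name in resources:
    print(name)
```

A real IAC tool would go on to create each of these resources in the cloud. The point of the sketch is only that the description of what is needed lives in a script that can be versioned, reviewed and re-run.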

So how does a technology more commonly used by technology operations personnel help with developing pilots? To answer this, we’ve outlined a few key areas below, comparing IAC with a more traditional method of organising resources for multiple parallel projects.

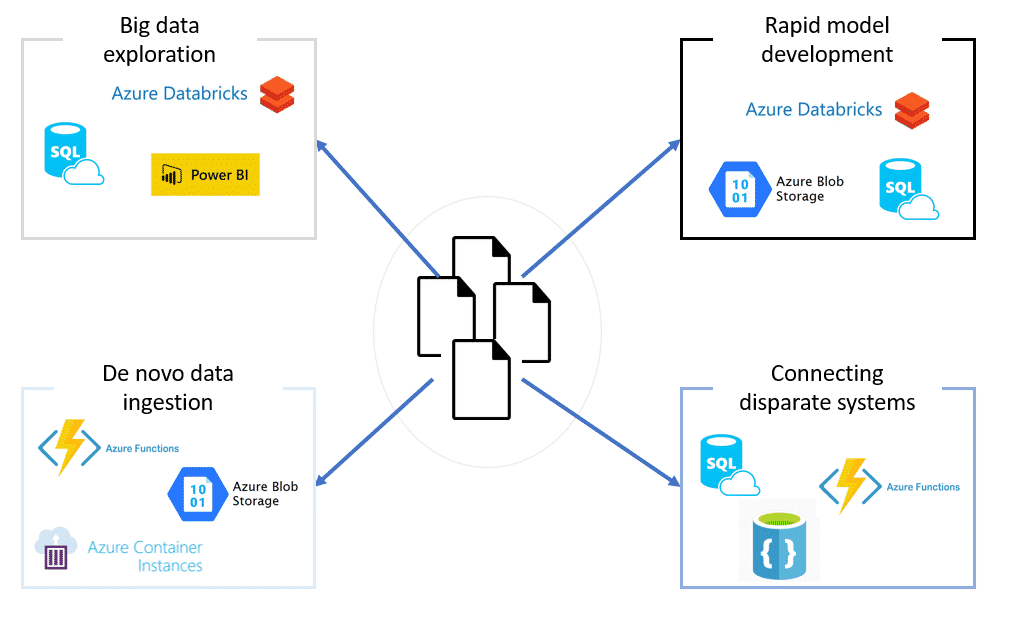

Once the pilots are established, a standardised script is taken and adjusted, where required, to each pilot’s specific needs. For example, all pilots require a data landing zone, some form of processing and a final database that connects to a visualisation engine such as Power BI. The customer pilot, however, will likely need a processing resource suited to aggregating large data sets, since it takes in multiple sources, and this can be added to the standard script.
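The idea of a standard script with per-pilot adjustments could be sketched as follows. This is an illustrative assumption rather than the actual scripts used: `STANDARD_TEMPLATE`, `build_pilot_config` and the resource type names are all hypothetical, and a real deployment would express the same idea in the template language of the chosen cloud provider.

```python
import copy

# Hypothetical standard template shared by every pilot: a landing zone,
# a processing resource and a final database for the visualisation engine.
STANDARD_TEMPLATE = {
    "landing_zone": {"type": "blob_storage"},
    "processing": {"type": "small_compute"},
    "database": {"type": "sql_database"},
}


def build_pilot_config(name, overrides=None):
    """Copy the standard template and apply any pilot-specific overrides."""
    resources = copy.deepcopy(STANDARD_TEMPLATE)
    for component, settings in (overrides or {}).items():
        resources.setdefault(component, {}).update(settings)
    return {"pilot": name, "resources": resources}


pilots = {
    # The customer pilot aggregates multiple sources, so it swaps in a
    # larger (hypothetical) processing resource.
    "customer": build_pilot_config(
        "customer", {"processing": {"type": "large_aggregation_compute"}}
    ),
    "operational": build_pilot_config("operational"),
    "marketing": build_pilot_config("marketing"),
}

for name, config in pilots.items():
    print(name, config["resources"]["processing"]["type"])
```

Because each pilot starts from a deep copy of the template, adjusting one pilot does not change the standard script or any of the other pilots.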

Once the scripts are set, including networking, access and security, they are deployed and the analysis work can begin in order to answer the original question, if possible. As this process continues, it may become evident that the operational pilot is not feasible due to data quality issues and that, even though the marketing question has an answer, there is little business value in completing it. These two pilots and their associated infrastructure can then be decommissioned with a few lines of code, preventing any further spend and allowing the remaining budget to be used either to scale the customer pilot or to start new pilots.
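The go / no-go review and the resulting decommissioning might look something like the sketch below. The `PilotReview` class, the `go_no_go` rule and the in-memory `deployed` set are hypothetical stand-ins. In practice the final step would call the cloud provider's tooling to delete each pilot's resources.

```python
from dataclasses import dataclass


@dataclass
class PilotReview:
    """Outcome of reviewing one pilot (field names are illustrative)."""
    name: str
    technically_feasible: bool
    business_value: bool


def go_no_go(review):
    """A pilot continues only if it is both feasible and valuable."""
    return review.technically_feasible and review.business_value


reviews = [
    PilotReview("customer", technically_feasible=True, business_value=True),
    # Not feasible because of data quality issues.
    PilotReview("operational", technically_feasible=False, business_value=True),
    # The question has an answer, but there is little business value in it.
    PilotReview("marketing", technically_feasible=True, business_value=False),
]

# Stand-in for the infrastructure that is currently deployed.
deployed = {"customer", "operational", "marketing"}

for review in reviews:
    if not go_no_go(review):
        # Stand-in for tearing down the pilot's infrastructure.
        deployed.discard(review.name)
        print(f"decommissioned {review.name}")

print("still running:", sorted(deployed))
```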

As a final stage of the example, the results of the customer pilot show good business value, so the decision is made to begin adapting the pilot to be more production-ready. This involves scaling a few of the original resources to give a more powerful development environment and starting up a user testing environment. The former can be achieved via a few steps within the portal to alter existing resources, and the latter by deploying a slightly modified version of the original infrastructure script to create the completely new environment.
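As a rough sketch of this last stage, the snippet below derives both a scaled-up development environment and a new user testing environment from one description of the customer pilot. The example above scales existing resources through the portal, so expressing that step in code here is an assumption made purely for illustration. `customer_dev`, `derive_environment` and the size labels are likewise hypothetical.

```python
import copy

# Hypothetical description of the customer pilot's original environment.
customer_dev = {
    "environment": "dev",
    "resources": {
        "landing_zone": {"size": "standard"},
        "processing": {"size": "standard"},
        "database": {"size": "standard"},
    },
}


def derive_environment(base, environment, size_changes=None):
    """Copy an environment description, rename it and resize some resources."""
    derived = copy.deepcopy(base)
    derived["environment"] = environment
    for resource, size in (size_changes or {}).items():
        derived["resources"][resource]["size"] = size
    return derived


# A more powerful development environment: scale a few of the resources.
scaled_dev = derive_environment(
    customer_dev, "dev", {"processing": "large", "database": "large"}
)

# A completely new user testing environment from the same description.
user_testing = derive_environment(customer_dev, "user-testing")

print(scaled_dev["resources"]["processing"]["size"])
print(user_testing["environment"])
```

Keeping every environment derived from the same base description means the user testing environment starts out structurally identical to development, differing only in the changes that were made deliberately.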

Jake Watson is a Data Engineer at The Oakland Group, where he builds environments within the Azure ecosystem for large-scale data processing and analysis.