Introduction

Data Platforms are EVERYWHERE but which is right for you? When Gartner names one of its top strategic trends for 2022 as Cloud-Native Platforms you know this isn’t a trend that is here today and gone tomorrow.

By 2025, cloud-native platforms will serve as the foundation for more than 95% of new digital initiatives – up from less than 40% in 2021.

At Oakland we know a thing or two about data platforms. Having built many different variations of them for numerous clients we now have a solid framework for efficiently creating or enhancing your data capabilities.

We have created this guide to provide practical and pragmatic advice so you can see what it takes to deliver a modern data platform, complete with patterns of success to follow and common pitfalls to avoid. Our underlying philosophy is to focus on the critical data capabilities rather than worry about technologies alone. We’ll come to this later.

Technical projects cannot be delivered by technology alone. Your data platform must be delivered as part of a wider change programme where people and process are as important as the tech.

In our experience, every project is unique and challenging. If you would like to discuss how you can deliver a successful data platform project, please book a discovery call to find out more.

Part 1: What do we mean by a Data Platform?

Cloud-native platforms are an essential tool to help accelerate the execution of enterprises’ digitisation plans over the next 2-3 years. They are essential because improved access to cloud services enables the introduction of modern technologies with less operational burden than with legacy systems. They will expedite and facilitate the creation of innovative business solutions.

Adopting cloud-native platforms will provide the primary means for enterprises to execute their digital strategies. Thus enabling business growth, customer retention and efficiency.

Source: Gartner

In very simple terms a data platform enables data access, governance, delivery, and security. It brings together the technology needed to collect, transform, unify and govern the data you need to support users, applications, models and data products.

In today’s world, it also needs to be cost-effective, highly scalable, and have security designed in from the outset. You will also need to consider how to ingest data from multiple sources (including other data platforms) while staying flexible enough to deal with system changes in the future. Your data platform architecture therefore needs to consider what data models you will need to support your business outcomes – but we’ll come onto that later.

The relentless pace of advancements in technology has introduced many solutions that claim to be the latest tool to solve everything. They have their uses, but alone will not be a silver bullet, and your data platform has to keep up.

We believe a successful data platform can only be achieved where cloud capabilities are complemented with an aligned data governance approach. Without this component, the issues of the past that lead to poor data quality, availability and capitalisation too often resurface. This both impacts the existing performance of the organisation and leaves future growth opportunities unexploited.

Moreover, any data platform has to directly target the capabilities an organisation needs. For example, a highly complex organisation with many data sources will need data conformity as a critical capability.

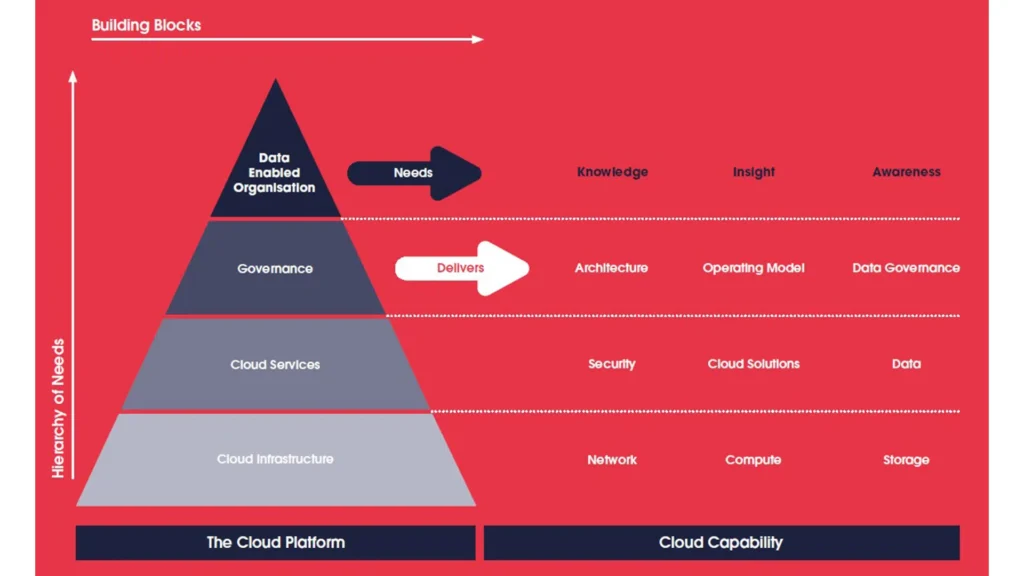

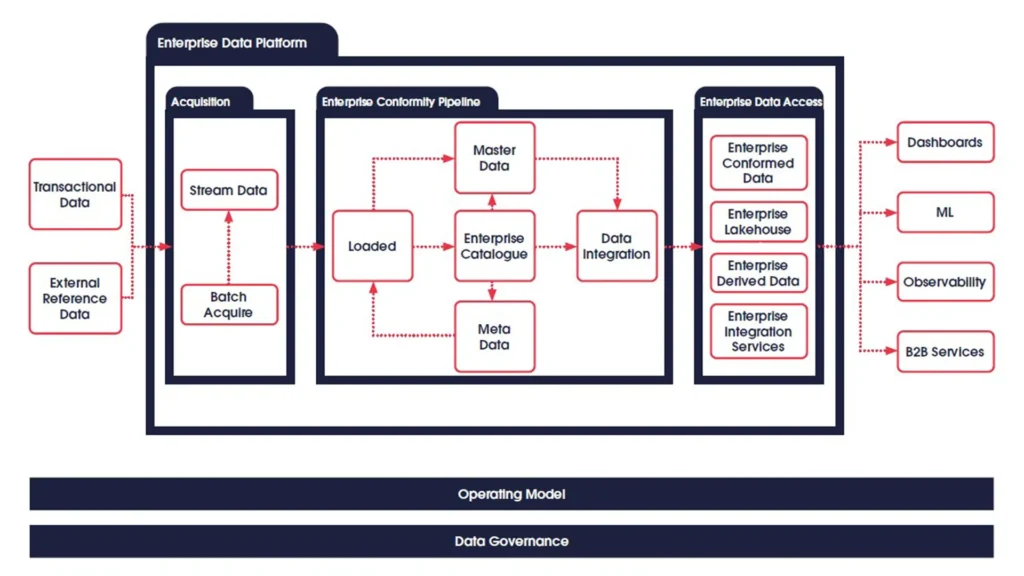

Here at Oakland, we see a data platform as a layered set of capabilities that build on each other to enable organisations to realise value using known quality data managed through governance processes, empowering confidence in data and decision making.

We believe any modern day data platform should be cloud-native.

By this we mean: Cloud-native platforms enable organisations to deliver solutions that are scalable to the enterprise without being heavily reliant on managing the infrastructure that they run on. These platforms are sourced from various public cloud services (e.g. Amazon Web Services, Microsoft Azure, Google Cloud Platform) or can be created utilising software that can structure a fully private cloud environment for added security and control. Cloud-native platforms use core pieces of functionality such as container management, infrastructure-as-code, and serverless functions while allowing for teams to deliver through continuous integration and delivery pipelines. These platforms can work with other cloud tools, SaaS tools, or on-premise applications and offer a speedier alternative to some traditional on-premise solutions.

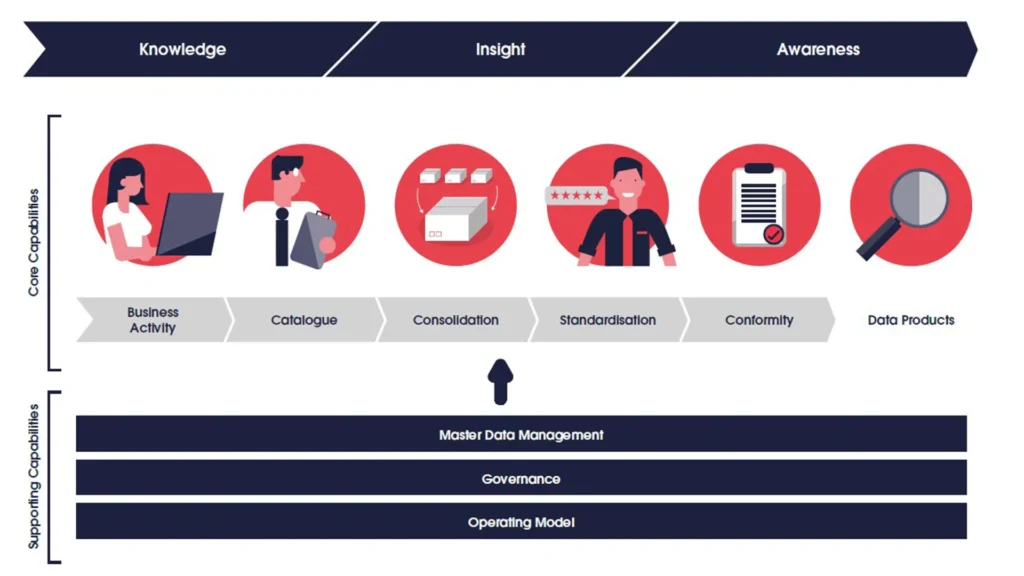

By providing all three layers shown in the diagram above – infrastructure, services and governance – the true value of cloud can be realised through the core capabilities they enable (grouped in the diagram under the themes of Knowledge, Insight and Awareness).

When conceived correctly, consolidation and standardisation of your data should be a critical step to success (this is where MDM can become important – but that’s for another day). Being able to create data which conforms to a standard format, structure or logic, helps to calm and remove all the “noisy” data that many organisations have built up over time. These concepts sit at the heart of everything we do and should form the solid foundations from which your data products can be built.
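To make the idea of conformity concrete, here is a minimal Python sketch rather than a prescribed implementation. The two source systems, their field names and the target format (`customer_id`, `signup_date`) are hypothetical and chosen purely for illustration.

```python
from datetime import datetime

# Hypothetical standard format: customer_id as an upper-case string,
# signup_date as an ISO 8601 date string (YYYY-MM-DD).
KNOWN_DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def conform_date(raw: str) -> str:
    """Try each known source format and return an ISO 8601 date string."""
    for fmt in KNOWN_DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognised date format: {raw!r}")


def conform_record(record: dict, field_map: dict) -> dict:
    """Rename source fields to the standard names, then normalise the values."""
    renamed = {standard: record[source] for source, standard in field_map.items()}
    return {
        "customer_id": str(renamed["customer_id"]).strip().upper(),
        "signup_date": conform_date(renamed["signup_date"]),
    }


# Two hypothetical systems describing the same customer in different ways.
crm_row = {"CustID": " ab123 ", "Joined": "03/02/2021"}
billing_row = {"customer": "AB123", "start": "2021-02-03"}

crm_conformed = conform_record(
    crm_row, {"CustID": "customer_id", "Joined": "signup_date"}
)
billing_conformed = conform_record(
    billing_row, {"customer": "customer_id", "start": "signup_date"}
)
```

Once both rows follow the same structure and logic they can be compared or merged directly, which is the point at which the “noise” starts to fall away.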

Data Journey

As you seek to progress from knowledge to insight and awareness, the capabilities must evolve to meet your ambitions. The component parts of a data platform can be seen below.

Now consider this: when we attempt to build a house (data platform) we don’t just start laying bricks (ingesting data) – we need to know the measurements of the rooms (data subject areas), the layout of them (the data model(s)), and there needs to be adherence to building regulations (governance). This is how you design a data platform which delivers on your ambitions.


Oakland, and seemingly the rest of the planet, are so focused on building data platforms in the cloud because of the many benefits the cloud provides over the more traditional, on-premise alternatives, particularly in the following areas:

- Reduced TCO: The total cost of ownership (TCO) of deploying and operating a cloud data platform is, in our experience, considerably lower than that of most traditional on-premise implementations.

- Service resiliency and management: Managing a cloud data platform requires far less effort and complexity to meet spikes and rising demand while keeping the lights on.

- Speed and service agility: Our clients have experienced a considerable reduction in delivery timescales since using our cloud-based platform delivery process, leveraging our reusable cloud blueprint architectures and components over the years.

- Business model transformation/optimisation: Speed, cost and scale are some of the more apparent benefits, but our advice is not to ‘lift and shift’ what you’ve already been doing in your legacy data landscape and dump it on the cloud. Instead, consider the broader range of benefits the cloud affords.

- Cloud-native platforms let organisations assemble, develop, integrate and operate solutions that use the inherent capabilities of cloud computing, accelerating their transformation by making IT a core component of their strategies.

- Legacy application backlogs can be addressed and enhanced with modern technologies offered through cloud-native platforms, enabling organisations to react quickly, and thus be more competitive.

- The managed aspects of cloud-native platforms help shift IT resources towards value-added outcomes by reducing the infrastructure burden.

Part 2: Why do you need a Data Platform?

Before discussing the challenges and process of launching a data platform, let’s consider why you actually need it.

At its simplest you need a ‘platform’ to help your audience find its data and the data find its audience.

Most organisations that have a desire to get more from their data will at some point require a ‘data platform’ of some sort. This is driven by the need to solve these common challenges:

- Disparate ways of working or engaging, leading to additional costs and timeframes in delivery of solutions

- Difficulty accessing data that is often spread across multiple systems and needs ‘stitching together’ to yield meaningful results

- Systems are often either too complex or complicated by legacy design meaning that manually ‘stitching together’ isn’t viable

- Confusing and cumbersome processes to engage IT and technology solutions

- Inability to influence the design and service architecture driving poor customer and colleague experience

- Lack of trust across data outputs – “my dashboard is right, yours is wrong”

- Skills and knowledge gaps leading to increased technical and resource costs

- Data is unstructured, not commonly understood and then misused

- The need to centralise ‘data platform’ capabilities where the business has ‘bought the next shiny solution’

To survive and evolve in this technology-led world, you need to be able to change and adapt at a pace like never before, and this is relentless. You need to be all over your insights, you need to be able to get to them quickly, and you need to be able to present them in the right way.

A data platform fundamentally enables you to surface those insights and make the right decisions to transform your business. Your data is the raw material you need to introduce new services and products, wow your customers, and differentiate you from your competitors.

The modern enterprise data platform will allow you to…

- Improve data governance – Done right we feel the modern data platform should reduce the data governance debt

- Accelerate speed to value – access to accurate and timely data

- Simplify your data estate, enabling more people to get access to it (democratise some may say!)

- Centralise and standardise a very complex and disparate set of data sources

- Add the governance needed to ensure trust and accuracy, while the technology handles timeliness and completeness

- Reduce the growing cost to support IT systems, replacing with resilient, future-proof, flexible solutions

- Improve ‘developer’ experience, delivering compliance in line with industry good practice

- Leverage your data for future change

- Simplify reporting process ensuring your data products are easy to create

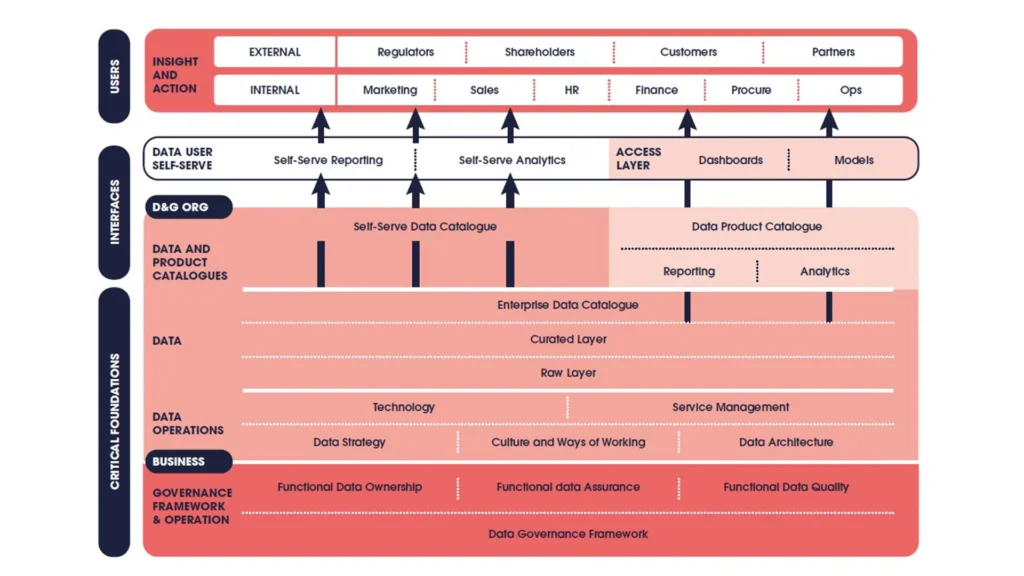

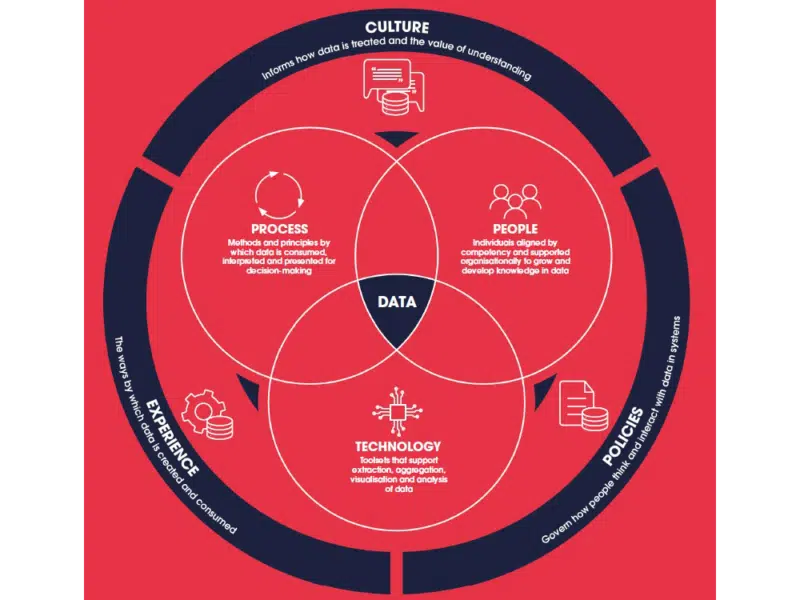

The diagram above represents many data and analytics capabilities coming together to unlock value from data assets in the organisation. A data platform alone does not create value but requires an intersection of process, people, and technology conceived around the platform to provide its actual value. Foundational elements of data ownership in the business set a baseline for platform success. Marrying up good governance with a strong data platform enables the data backbone for an organisation. Finally, building processes and organisational structures to deliver compelling data products to users is the realisation of what the platform aims to provide. Bringing these together is the basis for creating value from the data held within your organisation.

However, don’t believe all you read either – data platforms are not the holy grail to answer all your problems in one go.

For example: “If you ingest all of your data into a data platform technology (Databricks, Synapse, Snowflake etc) then you have a data platform” – not true!

Only when there is sufficient governance as well as standardisation, consolidation and conformity of the data to enterprise architectural standards, will the desired benefits come to fruition.

When considering your data platform, we recognise that most businesses operate from a ‘Brownfield’ state. They often already possess some of the resources and assets they need to implement a data platform. Your business case, therefore, needs to identify what you can reuse – be that people, processes, technology or partners.

But beware, even with these internal capabilities, many in-house teams struggle because they:

- Overlook the connecting ‘glue’ that pulls people, processes and technologies together

- Struggle to unlock the core data that cuts across the business and data landscape

- Try to adopt first principles instead of using established blueprints to accelerate and streamline the development process

You can learn from these observations by asking the following questions during your business case planning phase:

- What are some known data platform ‘prefabrications’ that will help cut development time and costs, whilst still delivering what we need in accordance with company data architecture, security and other related policies?

- Which business outcomes and functions are best served by the data platform, and what data will be required to meet those needs?

- What has to change for alignment across the business, data and technology communities critical to the delivery and ongoing success of the data platform?

Part 3: So you’ve decided to press ahead – what are the challenges you may face?

Before we share our best thinking around data platform delivery, it’s worth shining a light on some of the challenges you can expect along the way so you can be well prepared. Introducing a modern data platform to the enterprise is not easy.

Challenge 1: Excessive Tech-Centric Focus

It’s easy to think of your data platform initiative as a technical project; after all, you’ll soon be designing, launching and modifying an expensive chunk of technology real estate.

But think back to the failed data management initiatives you’ve observed in past organisations – what did they have in common?

Chances are, they got bogged down in the tech at the expense of the business strategy.

Technical teams often prefer to solve technical problems rather than get involved in the messy business of persuading people with different objectives to collaborate.

The big risk in being overly tech-focused is that if your data platform does not meet user needs (such as data availability and data platform usability), people won’t use it. You will achieve minimal adoption and the platform will fail to deliver tangible business outcomes.

Your data platform aspirations should therefore form the ‘pointy end’ of a data strategy – it’s where the rubber hits the roadmap of your digital transformation.

Whenever you feel the narrative swinging too far over to the tech, bring it back with questions such as:

- What does this tech mean to our business model and value proposition?

- How will the technical direction impact our strategic goals?

- How will the tech impact the customer experience?

Without this, you run the risk of low adoption, low involvement from business users, and ultimately low value delivered, if any.

Challenge 2: Departmental Data Silos

In an attempt to solve a tactical or near-term challenge, departments or cross-business functions can often be swayed by the allure of a solution vendor’s shiny offering. The department then commissions a localised solution that seemingly fits their needs but doesn’t take stock of the wider data strategy or needs of the business.

Other teams then struggle to extract this new data, particularly if the department has used off-the-shelf solutions.

The result is an ever-increasing technical burden that becomes difficult to unravel and migrate in the future.

Challenge 3: Poor Quality Data

One of the most common issues we have to deal with is poor quality data. Your data platform will only deliver your business goals if the data ingested is of a high enough quality; otherwise your platform could sink without a trace.
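One way to picture “high enough quality” is a simple check applied before data is ingested. The sketch below is only an illustration: the completeness measure, the 95% threshold and the field names are assumptions of ours, and a real platform would normally test several quality dimensions, not just completeness.

```python
def completeness(rows, required_fields):
    """Share of rows with a non-empty value for every required field."""
    if not rows:
        return 0.0
    complete = sum(
        1
        for row in rows
        if all(row.get(field) not in (None, "") for field in required_fields)
    )
    return complete / len(rows)


def passes_quality_gate(rows, required_fields, threshold=0.95):
    """True when the batch is complete enough to ingest (threshold is illustrative)."""
    return completeness(rows, required_fields) >= threshold


# Hypothetical batch of asset records from a source system.
batch = [
    {"asset_id": "A1", "site": "North"},
    {"asset_id": "A2", "site": ""},      # missing site
    {"asset_id": "A3", "site": "South"},
    {"asset_id": "A4", "site": "East"},
]

score = completeness(batch, ["asset_id", "site"])
accepted = passes_quality_gate(batch, ["asset_id", "site"])
```

Here three of the four rows are complete, so the batch scores 0.75 and is held back at the 95% threshold instead of quietly polluting the platform.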

Challenge 4: Lack of Data Governance

Data governance is the specification of decision rights and an accountability framework to ensure the appropriate behaviour in the valuation, creation, consumption and control of data and analytics.

Source: Gartner

The demand for data governance originally emerged from the shift toward more robust regulatory controls in the banking and insurance sectors.

Today, data governance is pervasive across all industries; you can now even buy data governance platforms! Yet many data platform initiatives stutter or fail when data governance is immature or lacks key components suited to data platform strategy and management.

When data governance is missing from your data platform initiative, you create a situation where:

- Business and technical users lose trust in the data

- Designing and maintaining the platform takes far longer

- Frustrating ‘Turf wars’ over data ownership erupt or remain unresolved

To find out more about data governance, check out our guide: ‘How to launch a Data Governance Initiative by Stealth’.

Challenge 5: Failing to consider the complexity and cost implications of the legacy data landscape

Your data platform is not an island; it needs careful integration with existing systems and processes.

At Oakland, we’ve been around a long time (three decades and counting), and one of the recurring trends we’ve seen is the case of the ‘overoptimistic’ target vendor.

Despite the glossy marketing blurb, no data platform is a true plug and play solution. Many times we have heard vendors describe how their solution seamlessly integrates with existing tech, only to be unable to explain in any detail how.

Some degree of vendor lock-in is inevitable, and using a vendor’s portfolio of cloud-native services increases it, although access to integrated services and increased discounts can be a plus. To limit lock-in (e.g. by using open source software solutions), make decisions on a case-by-case basis and vet the total cost of ownership (TCO) of the intended solutions.

Introducing a new data platform into any enterprise requires a careful analysis of the approaches that have gone before and now require direct integration with your new data architecture. (Overlooking the need for a robust data architecture is something we’ll cover in another guide).

We’ve parachuted into several data platform recoveries where the complexity of integration was overlooked and soon became the mother of all obstacles to going live.

In short, don’t overlook the essential brownfield discovery tasks that some vendors like to gloss over in their haste to get you over the finishing line.

Challenge 6: Not prioritising requirements

“So, what are we building again?” can become a common challenge as you get deeper into data platform delivery.

The problem is the modern data platform can support a plethora of use cases including:

- BI/MI capabilities

- Self-service reporting

- Integration

- Data catalogue

- Single source of truth e.g. single voice of the customer, employee, product, service or asset

- Executive or regulatory reporting

- Advanced analytics and AI

- Real time analytics

- Asset management

- Adjunct to a core system

The list goes on and on…

It’s easy for your data platform to lack clarity and prioritisation around its core function, especially as different groups begin to see your platform as a data ‘dumping ground’. The practice of “let’s keep it in case we need it” can lead to a bloated data platform, further complicating the task of getting insights out of your data.

To prevent a toxic swamp of data, we prefer to phase the delivery of a data platform with a regular cycle of ‘Lighthouse Projects’ that solve burning issues within the business but still align to an overarching data strategy and architecture with a clear transition to an enterprise solution.

Start small, think big, and act fast.

You get to demonstrate the benefits of each release, garnering support as you deliver each successful project and helping to justify investment in further initiatives.

Challenge 7: Creating the case for change

Creating a compelling business case for a modern data platform can be challenging for many organisations, particularly when faced with a legacy of delivery struggles.

As you’ll read later in this guide, there are multiple benefits and use cases for deploying the next generation of data platforms that will appeal to a range of leadership sponsors.

We’ve found that the key is to deliver smaller, faster pilot projects that deliver rapid and sustained gains without over-investment and risk, whilst building data capabilities at the same time.

What does the business really want to know? Where do they need insights?

By focusing on delivering the right data at the right time to support specific business outcomes, you’ll quickly gain support for the future of your data platform.

Over the years we’ve also assembled a portfolio of transformation stories that highlight the impact of data platform introduction, so feel free to reach out and learn more.

Part 4: Assembling your Data Platform

No guide to a data platform could be complete without a framework diagram, so here we go.

This is Oakland’s Enterprise Data Platform blueprint

At this stage we would assume the requirements for your data platform are now known and documented. So, where do we go from here… (consider this from a technical viewpoint)

We believe there is a logical order to tackle your data platform delivery. Yes, every organisation is different, every challenge unique, all fraught with idiosyncrasies and quirks, but all things considered, our six-step approach provides a pragmatic guide from which you can start…

1. Catalogue sources

we know your data is available in your technical estate. A key activity in building a data platform is to list those data sources, the type of process each supports and the information each stores.

2. Design output model

to get good value from a data platform, the information in it must be presented in a way that meets the needs of the platform – you do this by creating a data model. You can then conform your source data to the data model, providing a reliable, trusted information repository.

3. Define your data governance needs (lightweight vs hardcore)

like all strategies, methodologies and frameworks, data governance must be applied contextually. Failing to build data governance in as a foundational component of the data platform effectively means you have an ungoverned data source, which damages the validity of the data and reduces its value as a trustworthy source. Pull together your data owners, stewards, processes and standards. Work out what business change is needed to support your data governance approach. Decide on where you are going to start and what your governance road map looks like – remember don’t attempt to boil the ocean; data governance takes time, effort and iteration.

4. Consider tools, technology, and resources

(let’s get our implementation strategy nailed). Data platforms often lose their way because of technology, either because a technology dictates a way of working or because it doesn’t do what you need without complex workarounds. We have found componentisation to be a good way to navigate the technology minefield, compartmentalising capability and managing interfaces to ensure successful interoperation. Tools, technology and resources mean capability, capacity and supportability, all critical elements of successful delivery and operation.

5. Build your capabilities

whilst iterating your use case achievements. Through the combined activities of understanding your sources, developing your data model, aligning data governance and establishing a strategy for technology and ways of working, building the platform becomes more predictable, both in the coordination of delivery and in the value and quality of what is delivered.

6. Realise value

with a repository of data presented in a structure (model), where the quality is known because of aligned data governance, you can serve multiple uses from this one source, confident that your data is valid and consistent across all use cases.
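As an illustration of how steps 1 and 3 can work together, the Python sketch below records each source in a simple catalogue and flags which sources have the governance basics in place before any build work starts. The field names, the example systems and the readiness rule are hypothetical assumptions, not part of the blueprint itself.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SourceEntry:
    """One row in a hypothetical source catalogue (step 1)."""
    name: str
    business_process: str               # the process the source supports
    information_stored: str             # the information it holds
    subject_area: Optional[str] = None  # where it fits in the data model (step 2)
    data_owner: Optional[str] = None    # accountable owner (step 3)


def ready_for_ingestion(entry: SourceEntry) -> bool:
    """Illustrative rule: a source needs a subject area and a named owner."""
    return entry.subject_area is not None and entry.data_owner is not None


catalogue = [
    SourceEntry("Finance ledger", "Month-end reporting", "Cost transactions",
                subject_area="Finance", data_owner="Finance Director"),
    SourceEntry("Project tracker", "Capital delivery", "Milestones and forecasts",
                subject_area="Projects"),
    SourceEntry("Legacy spreadsheet", "Ad hoc analysis", "Unknown"),
]

ready = [entry.name for entry in catalogue if ready_for_ingestion(entry)]
blocked = [entry.name for entry in catalogue if not ready_for_ingestion(entry)]
```

Even a list this small makes the governance conversation tangible: in this example only the finance ledger is ready, and the other two sources need an owner or a place in the model before they are ingested.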

Building a data platform will require you to assemble a varied cross-section of skills, technologies and leadership, so the following highlights how we approach data platform service delivery.

When considering building an internal team, this can also be a useful cross-reference as you develop your approach.

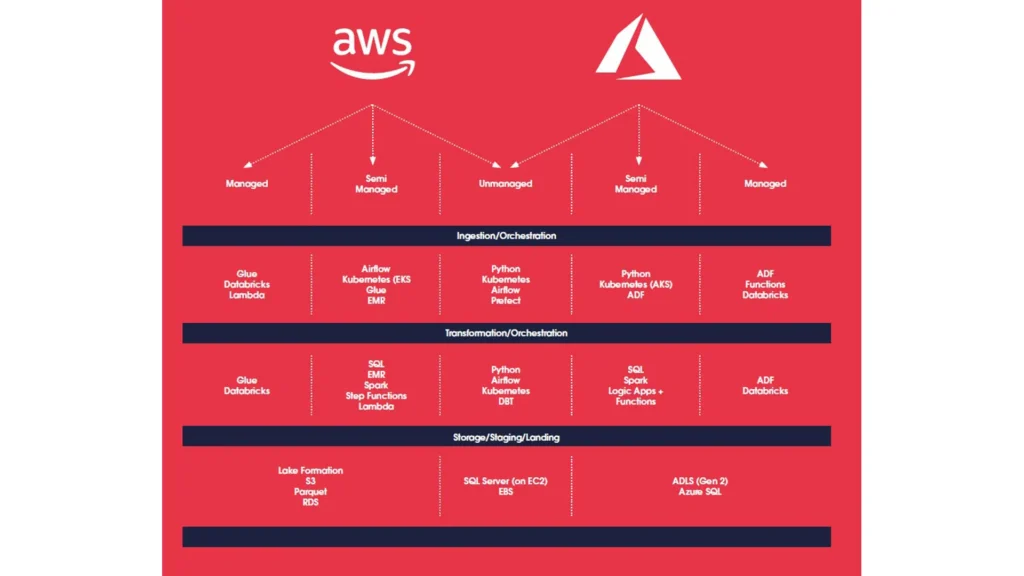

As well as the step-by-step guide above, it can often help to leverage delivery blueprints that accelerate data platform delivery, inject some much-needed governance, and bring any aspirations for a digital strategy within reach in a shortened timeframe.

Data platform standard patterns are a simple way to speed up the overall delivery process and inject some repeatable architectures and delivery processes into your organisation.

Having delivered so many data platform initiatives, we’ve adopted a pragmatic view on the technologies and practices that consistently deliver rapid results and those that don’t.

We have developed standard patterns to integrate across many core architectures. As an example, we utilise patterns from within existing ecosystems as illustrated below.

Oakland has standard patterns to integrate across many core architectures:

Part 5: How Oakland can help you

We relish the opportunity to help deliver data platform initiatives for new and existing clients.

We have remained technology agnostic for over 35 years and are able to develop the right solution regardless of your existing technology estate.

Those 35+ years operating across tech, data, people and process have taught us how to navigate and bring together those functions in a more coherent way. But one size does not fit all – there is no silver bullet. Our playbook is tech, people and process (full end-to-end delivery to get the change to stick). We offer an agile, tailored and flexible approach which is able to move at pace and change direction when required.

While a data platform is the basis of the technology investment you will be making for data in your organisation, it alone will not move the organisation forward. You must also invest in building capabilities in the data and analytics space that can leverage the platform to drive value. Your capability solution should give equal priority to the technology stack that you are developing, the data product management process, and the organisation of data stewards across functions. This will form the basis of lasting value delivery for the organisation, as you can move forward with changes in technology more easily in the future while continuing to answer the compelling questions that are demanded of data within the organisation.

We help deliver the standard technical and delivery services you would expect from a premier partner:

- Assessing and developing operating models

- Creating a cloud data platform from scratch

- Data modelling / data architecture / data solution architecture

- Developing data functions

- Re-engineering and improving business processes reliant on the platform

- Developing the supporting ‘non-technical’ infrastructure to ensure long term success

We’ve been around long enough to know that delivering a modern data platform (with all the machine learning, automation and data science capabilities you demand) is pointless if your organisation isn’t ready to embrace this level of change.

We’re not a mega-firm with bus loads of consultants and offshore delivery staff, because that model, in our experience, quickly becomes excessive and self-defeating. You need to deliver a data platform that fits your existing capabilities once the experts leave the building.

Delivery success lies in ownership engagement and transition

Our approach is to actively engage and transition the business/tech/data communities to take ownership of the platform at all levels through the following:

- Coaching client-side data and product teams to put in place the right operating model

- Enabling data and product teams with the right technology and development methodology to scale future product team resourcing and data processing demands

- Assuring the launch and long-term future of the data platform through data governance

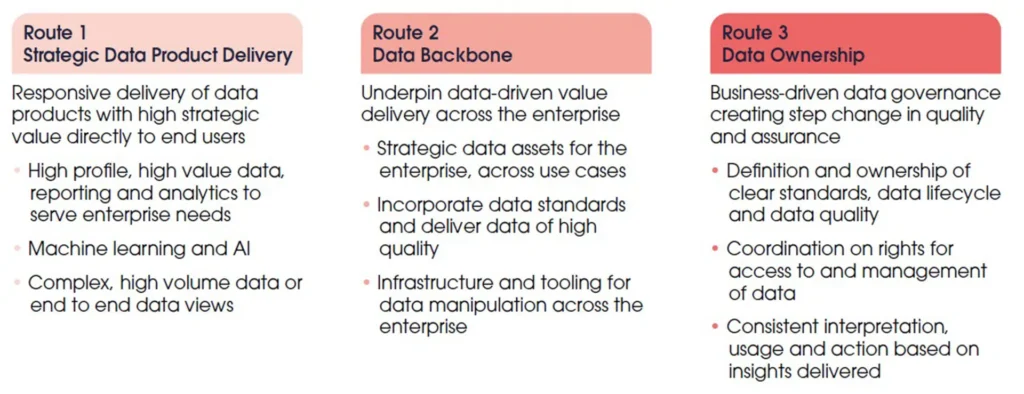

Remember, we focus on value creation through three key delivery routes:

Role 1: Strategic Data Product Delivery

Responsive delivery of data products with high strategic value directly to end users

- High profile, high value data, reporting and analytics to serve enterprise needs

- Machine learning and AI

- Complex, high volume data or end to end data views

Role 2: Data Backbone

Underpin data-driven value delivery across the enterprise

- Strategic data assets for the enterprise, across use cases

- Incorporate data standards and deliver data of high quality

- Infrastructure and tooling for data manipulation across the enterprise

Role 3: Data Ownership

Business-driven data governance creating step change in quality and assurance

- Definition and ownership of clear standards, data lifecycle and data quality

- Coordination on rights for access to and management of data

- Consistent interpretation, usage and action based on insights delivered

Our clients feel comfortable and reassured enough to sustain the data platform once our partner role comes to a close.

Building out a data platform for the first time can be challenging, but so is moving from a first generation to a second generation…

Oakland can help in a number of ways:

- Data Platform Capability Assessment: Have you already got something, but it’s not delivering value?

- Data Platform Accelerator: You want to dip your toe in and don’t want to commit to a multi-££ programme, but need to demonstrate capability and value quickly

- Strategic Data Platform Delivery Partner: You’re looking to deliver a large enterprise data platform programme and need a partner to design, deliver and run the programme

- Data Architecture Assurance: Is the enterprise data architecture fit for purpose? Do you need an independent view to confirm your plans and designs?

Part 6: More real-world examples

This guide has provided conceptual guidance and approaches, but there’s nothing better than seeing real world examples of this work in action. Here we explore some of the real-life challenges and positive outcomes our clients experienced during their data platform journey.

A water utility – Improve trust, insight and efficiency of projects and regulated reporting

Challenges

The existing reporting infrastructure impacted a water utility’s ability to deliver timely reports that met the needs of various finance, projects and regulatory stakeholders.

There were a variety of challenges to address:

Speed and value of insight:

Basic project data analytics and reporting had become slow and laborious, whilst advanced analytics was challenging.

Lack of easy accessibility:

Reporting teams were physically unable to reach many datasets. This lack of reach resulted in wasted resources, manually linking and merging disparate data to ensure each report was fit for purpose.

Portfolio complexity:

Legacy reporting practices had made it harder to manage the project portfolio and ensure project and regulatory milestones would be met, or at least flagged as a warning if they were drifting off-target.

Demand for standards:

The CIO had expanded a new data infrastructure that meant any new project analytics capabilities would need to conform to the revised company standards for data architecture.

Use Case:

They needed a way to maintain reporting quality while using a faster, more scalable and more cost-effective system than ever before, and meeting stringent company standards for architecture, performance and regulatory compliance.

Business Outcomes

There were many outcomes, but here are some of the most impactful:

- Reduced risk: The utility can now rapidly pinpoint potential failure points in capital projects in a way it couldn’t before.

- Innovation use case: The project delivered a defensible use case for implementing data solutions according to the corporate data architecture standard.

- Operational effectiveness: Project managers can run the business and projects more effectively than previously.

- Greater trust: The data platform has improved confidence and strengthened the relationship between key stakeholders and regulators.

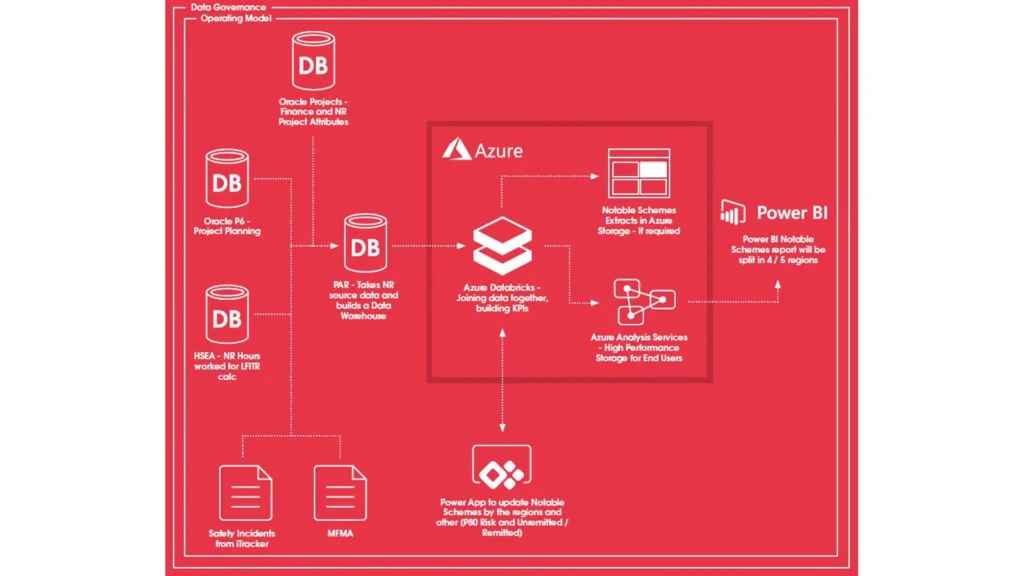

Network Rail – Intelligent forecasting and analytics for complex capital projects

Challenges

Rail projects need to be delivered faster, at less cost and with greater value to the taxpayer than ever before.

In a bid to ‘put passengers first’ and create a more customer-focused, service-driven organisation, Network Rail devolved the delivery of capital projects to the regional level, which put pressure on the existing capital projects reporting platform:

Legacy obsolescence:

The existing business intelligence and forecasting technology used for Regional Capital Delivery reporting had become dated and out of touch with the modern demands of complex engineering and infrastructure programmes.

Lack of unified data strategy:

Whilst each region had its own reporting needs, Network Rail required coordinated governance of the data definitions, data structures and dictionaries across the group. Regions needed local autonomy but within the constraints of a common data strategy.

A shift in reporting models:

Network Rail had transformed its process for major engineering projects, from the GRIP (Governance for Railway Investment Projects) process to the new PACE (Project Acceleration in a Controlled Environment) approach. This step-change in reporting policy meant added pressure for the legacy data platform to service new capabilities.

Data Platform Use Case:

Develop an agile data platform supporting state-of-the-art intelligent forecasting and reporting, capable of rapidly adapting to the complex and changing needs of capital projects reporting across the entire business.

Data Platform Design:

Network Rail was keen to mature towards open technology and move away from ‘lock-ins’ with a particular vendor or supplier. As a result, Oakland opted for standard ‘off-the-shelf’ tools readily available in the marketplace, such as Microsoft Azure and Databricks.

Business Outcomes

The project transformed capital projects decision support within Network Rail. Here are just some of the benefits it has delivered:

- Smarter decisions: Machine learning and advanced analytics tools were deployed, offering greater forecasting and decision analytics than previously possible, potentially saving millions of pounds. These insights are now just another information source that users can incorporate into their reports.

- Faster decisions: Reporting processes have been streamlined, resulting in faster, better quality decisions without the manual cost and ‘Excel-hell’ of the previous system.

- Capital savings: Intelligent project analytics has delivered far greater transparency into projects with misallocated contingencies, which can now be flushed out of the portfolio roadmap, leading to the potential for substantial savings each year.

- Self-service: Each region can now ‘do their own thing’ and leverage multiple reporting levels, including static reports and custom reports (on static models). Users can also build their own models on data pipelines with curated data, giving them full customisation of data processing.

Income Analytics – Creating a catalyst for growth

Challenges

Income Analytics was established in 2019 by three entrepreneurs with a history of working in global real estate investment markets.

Their goal was to leverage twenty years of planning and development expertise to create an online platform that would enable real estate professionals, investors, owners and lenders to make better-informed, data-driven letting, investment and lending decisions.

The problem was that their legacy (on-premises) data platform was creaking under the strain:

Outdated data processing:

Getting quality data into the system was time-consuming and clunky. Staff had to manually input client portfolio information and asset data, then integrate with the latest information from Dun & Bradstreet.

Ineffective customer experience:

Clients received weekly updates, each of which depended on slow and laborious manual data validation. When source data changed, the Income Analytics team had to email details of the change to clients manually.

Lack of visibility:

Many of Income Analytics’ clients had hundreds of assets, so they needed to quickly and visually access their information, putting added pressure on a cumbersome data operation behind the scenes.

Reduced morale:

The laborious method of inputting data led to an overworked team, which meant Income Analytics struggled to concentrate on growing the business.

Use Case:

The Income Analytics leadership team asked Oakland to re-architect their data platform, drawing on Oakland’s data strategy, cloud engineering and process transformation expertise.

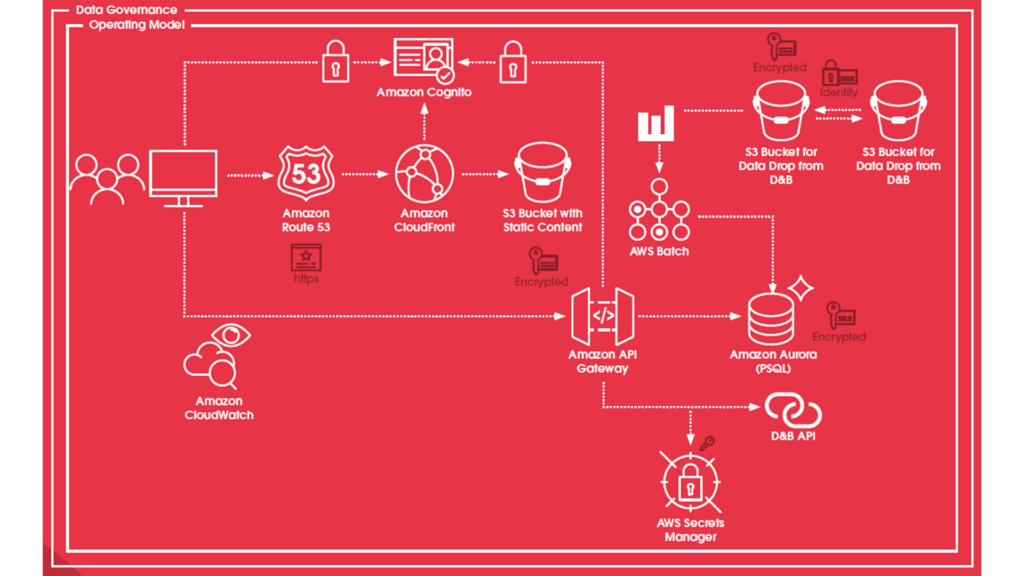

Data Platform Design:

We started with a small proof of concept that began transforming their complex legacy system into a modern application, leveraging a cloud architecture to create a platform for continued innovations such as machine learning and predictive analytics.

The following diagram explains our final design:

Business Outcomes:

Let’s explore some of the business outcomes from this design.

Faster automation drives more intelligent decision making

The Oakland-built data platform now provides real-time, continuous monitoring of various Dun & Bradstreet data sources such as:

- Tenant Data

- Company Data

- Historical Scores

- Parent Data

- Financial Data

With seamless integration and intelligent workflows, the data platform automatically feeds the source datasets directly into the Income Analytics service offering, a game-changer for a market that thrives on making quick decisions.

The result was a highly differentiated offering that delighted their customers and a great example of leveraging data platforms to create a competitive advantage, not just operational cost savings.

Delivering rapid and incremental commercial value

Building in the cloud meant Oakland could design a solution roadmap that ensured the business could benefit commercially from each significant release instead of waiting long periods to recoup their investment.

Leveraging the speed and agility of the cloud (with our cloud architecture blueprints) meant we could consistently hit operational milestones faster than anything we’ve experienced in a purely on-premises environment.

Driving down costs

Oakland designed a solution to leverage AWS serverless technology. This decision helped speed up the development process (and reduce technology costs for the client).

It also means the client paid only for the limited usage required during the build phase and now pays only for the resources they consume.

Part 7: Final words of advice

Hopefully, this guide has answered many of the questions you or your team have been wrestling with as you consider the many options associated with data platform implementation.

To close, here are some pointers to consider before moving forward on your data platform journey.

- Platforms are ecosystems: In effect, you’re building a series of integrated solutions that constitutes a ‘platform’ when complete. If you refer to our data platform case studies, you’ll notice we constructed each data platform with various underpinning applications, all requiring seamless integration.

- Mitigate hidden costs: When building a cloud-based data platform, the allure of reduced implementation and ownership costs is compelling. However, many unexpected costs can creep in and derail the success of your project. One of the ways we’ve found to reduce these costs at Oakland is to rely on our standard blueprints for data platform delivery and architecture. Don’t pay for consultancies to ‘learn the ropes’ of data platform implementation with your time and budget.

- Reimagine the customer and employee experience: Don’t just consider cost and speed improvements; think about how the cloud can transform every facet of the customer experience with your core services through a modern data platform.

- Go lean, go fast: Plan with the end in mind, but build an early prototype to validate expectations and offer something to the business to foster a clear vision and buy-in. Business models can adapt and shift quickly, so rapid delivery is vital for staying aligned.

- Success lies in transition: Building a data platform is only one part of the puzzle. You need the business to engage and buy into the platform’s goals. Think beyond technical transition. The success of your data platform stems from culture and behaviour change delivered through programmes of coaching and training.

- Align with corporate standards: Don’t fly solo. Build a solution that meets the varied security, governance, quality and architectural standards of each governing body within your organisation because this will deliver far greater business value over time.

- Forget people at your peril: Don’t forget that successful adoption of your data platform will only happen if you’ve aligned the people and process elements. Using the platform, and using it in the right way, will often require a shift in mindset (get ready for a cultural change), a shift in structure (who’s going to own the DevOps team going forward?) and the introduction of new processes, not only to manage the operation of the platform but also to educate the business in how to make best use of the data products it produces.

As we always say, there are no silver bullets. Building a data platform can be a hugely challenging endeavour, but when you get it right the results are truly transformational.