What is data pipelining, and why does it matter?

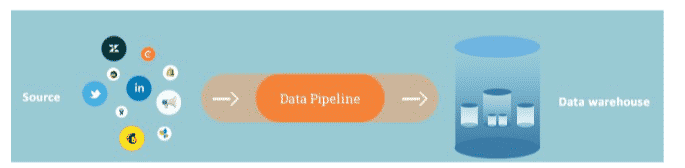

Data pipelining, or orchestration, is an everyday activity in which companies move their data from one place, typically the primary storage location, to another, such as a cloud-based data lake, often transforming it along the way. Whilst this is a standard operational activity, the number of pipelines a single company runs has grown as data is used more widely, mainly for reporting, analytics, and machine learning. These tasks are easiest when previously disparate data sources are available in a clean format, all in one place. As such, while the noise around ML and AI grows, the importance of the data pipelines that feed these processes, and normal business operations, also increases.

So surely this problem has been solved?

Given that moving data around is such a common activity, you might assume that there is an agreed set of tools and standards. At Oakland we’ve learnt never to assume: while the formats in which you move data around are now largely settled, the tooling to do so is constantly changing, largely driven by the increased usage of cloud services and the move towards “big data”. Common tools these days include Apache Airflow, Azure Data Factory, AWS Glue, Talend, and Informatica, with open-source, bring-your-own-resource, and managed-service mindsets all catered for. As you would expect, all these tools have things for and against them, and as with every technology solution there is no one size fits all. However, many of these tools share a common set of issues that we believe are overcome by something developed more recently: Prefect.

What is Prefect?

Prefect is an open-source (free to use and modify) data orchestration library, which can also be used as a paid platform. You would use it in place of the tools mentioned in the previous section, such as AWS Glue, Azure Data Factory, and Talend, which co-ordinate both the movement and transformation of data, often running tasks in parallel where possible.

It can handle orchestrating batch movements of large amounts of data, including transformation and cleaning operations, using parallel running tasks if required.
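
To make "running tasks in parallel where possible" concrete, here is a minimal sketch using only Python's standard library. It is not Prefect's API: the function names and sample data are hypothetical, and a thread pool stands in for whatever executor an orchestrator would use. It shows two independent extract steps running side by side, with a transform that waits for both.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical extract steps standing in for two independent data sources.
def extract_orders():
    return [
        {"customer_id": 1, "amount": 20.0},
        {"customer_id": 2, "amount": 35.5},
        {"customer_id": 1, "amount": 4.5},
    ]


def extract_customers():
    return {1: "Acme", 2: "Globex"}


def transform(orders, customers):
    """Join orders to customer names and total the spend per customer."""
    totals = {}
    for order in orders:
        name = customers[order["customer_id"]]
        totals[name] = totals.get(name, 0.0) + order["amount"]
    return totals


def run_pipeline():
    # The two extracts do not depend on each other, so they can run in parallel.
    with ThreadPoolExecutor(max_workers=2) as pool:
        orders_future = pool.submit(extract_orders)
        customers_future = pool.submit(extract_customers)
        orders = orders_future.result()
        customers = customers_future.result()
    # The transform needs both results, so it runs only once both have finished.
    return transform(orders, customers)


if __name__ == "__main__":
    print(run_pipeline())
```

An orchestrator's job is essentially to work out this dependency ordering for you, and to add scheduling, retries, and monitoring on top.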

So why should you use it?

A mature market like data orchestration software requires something special to move a company from its current tooling. However, we believe there are several core features that showcase the benefit of using Prefect:

Usability:

- Prefect’s pipelines can be written entirely in Python, one of the main languages used for Data Engineering and Data Science. This lets more engineers easily develop complex and dynamic pipelines, using standard version control tools, such as Git, for added safety.

- Being just a Python library at its heart, it only takes a few minutes to set up and try. It is also much easier to deploy and productionise, as it doesn’t need dependencies like databases and message queues to operate, which other data orchestration tools, like Apache Airflow, may need.

- The managed service, Prefect Cloud, provides a simple-to-use UI that doesn’t have direct contact with any of the data involved in your pipelines. This means that data security is maintained without building a custom UI on the same network as the compute source processing the data.

Efficiency:

- Prefect doesn’t require data to be serialised between every transformation task, even if the data is gigabytes in size. This is a BIG deal: for most data pipelines, 90% of the run time will be spent de-serialising and serialising the data from disk to memory and back again, so you can potentially cut run times by a massive amount. As far as we’re aware, no other data orchestration software does this as well, or at the scale Prefect does.
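
The difference between the two styles of handoff can be sketched in plain Python. This is an illustration only, not Prefect's internals: the task functions and sample rows are hypothetical, and `pickle` stands in for whatever write-to-disk and read-back step a tool might perform between tasks. Both versions produce the same result; the second simply does extra work at every handoff.

```python
import pickle


def clean(rows):
    """Drop rows with a missing value."""
    return [row for row in rows if row["value"] is not None]


def double(rows):
    """Double each remaining value."""
    return [{"id": row["id"], "value": row["value"] * 2} for row in rows]


def run_in_memory(rows):
    # Each task receives the previous task's result as a normal Python object.
    return double(clean(rows))


def run_with_serialisation(rows):
    # Each handoff is serialised to bytes and deserialised again, standing in
    # for a write to disk and a read back between tasks.
    cleaned = pickle.loads(pickle.dumps(clean(rows)))
    return pickle.loads(pickle.dumps(double(cleaned)))


# Hypothetical sample data.
rows = [{"id": 1, "value": 10}, {"id": 2, "value": None}, {"id": 3, "value": 7}]

if __name__ == "__main__":
    print(run_in_memory(rows))
    print(run_with_serialisation(rows))
```

With three rows the overhead is invisible; the cost of the round trip grows with the size of the data being passed, which is why it matters at the gigabyte scale.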

Scalability:

- Prefect can run on Dask, a big data in-memory processing library, allowing it to scale from a single pipeline task to thousands, handling data ranging from megabytes to terabytes. This means you can efficiently perform your orchestration on the same server as your data processing, saving server costs and reducing network complexity.

Flexibility:

- Prefect can orchestrate almost anything that can be written in Python, including machine learning tasks, API calls, and the reading and writing of hundreds of data formats like Excel, CSV, and JSON. As such, you will be hard-pressed to find tasks that can’t be orchestrated via Prefect.
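
As a small illustration of "anything that can be written in Python", the sketch below converts CSV to JSON using only the standard library. The function names and sample data are hypothetical and nothing here is specific to Prefect; the point is that each step is an ordinary Python function, which is the kind of unit a Python-based orchestrator can schedule.

```python
import csv
import io
import json


def read_csv(text):
    """Parse CSV text into a list of row dictionaries keyed by column name."""
    return list(csv.DictReader(io.StringIO(text)))


def to_json(rows):
    """Serialise the rows as a JSON array."""
    return json.dumps(rows)


# Hypothetical sample input; in a real pipeline this would come from a file or API.
CSV_TEXT = "id,name\n1,Ada\n2,Grace\n"

if __name__ == "__main__":
    print(to_json(read_csv(CSV_TEXT)))
```

Note that `csv.DictReader` returns every value as a string, so any type conversion would be a further transformation step.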

Maintainability:

- As a code-based solution, Prefect keeps all the pipeline logic in a clear, documentable format, unlike low / no-code solutions, so the differences between pipelines in different environments can be understood quickly. It also allows functions to be shared between pipelines for similar tasks, saving you time when maintaining multiple pipelines.
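
Sharing functions between pipelines might look something like the sketch below. It is plain Python rather than Prefect's API, and the helper and pipeline names are hypothetical. One cleaning function is written once and reused by two otherwise unrelated pipelines, so a fix to the helper reaches both.

```python
def normalise_email(value):
    """Shared helper: trim whitespace and lower-case an email address."""
    return value.strip().lower()


def customers_pipeline(records):
    """Return customer records with their email field normalised."""
    return [{**record, "email": normalise_email(record["email"])} for record in records]


def newsletter_pipeline(addresses):
    """Return unique normalised addresses, keeping first-seen order."""
    seen = set()
    unique = []
    for address in addresses:
        cleaned = normalise_email(address)
        if cleaned not in seen:
            seen.add(cleaned)
            unique.append(cleaned)
    return unique


if __name__ == "__main__":
    print(customers_pipeline([{"id": 1, "email": " Jo@Example.COM "}]))
    print(newsletter_pipeline(["A@x.com", "a@x.com ", "b@x.com"]))
```

Because the helper lives in version-controlled code, a change to it shows up as an ordinary diff that can be reviewed like any other.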

And why shouldn’t you?

Prefect won’t be for everyone, and we wouldn’t recommend it to data teams for the following scenarios:

Streaming:

- Prefect is designed only for ad-hoc and batch data pipelines, so any complex data solution that needs to be updated every minute or more often should look at streaming data software, like Apache Kafka or AWS Kinesis, instead.

No code solution:

- While Prefect has a User Interface for monitoring pipelines, its pipelines are designed to be written in Python. Those who do not want to build and maintain Python code should look at low-code / no-code solutions like Azure Data Factory, which we have used successfully for various clients.

Very simple operations:

- If you are just moving data from one database to another in a linear path without transformations then Prefect, or any data orchestration software, may not be needed.

In conclusion…

Prefect is a great new tool that can help consolidate data pipelining/orchestration activities by using a very common language, with the ability to scale out efficiently. This blog only covers a fraction of Prefect’s features. For more complex examples of how we’ve integrated Prefect into our projects, please get in touch by emailing hello@theoaklandgroup.co.uk or calling 0113 234 1944.

Jake Watson is a Senior Data Engineer