In recent years, many organisations have expressed a need to be more data driven. This stems from a desire to capitalise on increasingly digitised processes and customer interactions, and on the insights that might be found in the data they create. As executives have pushed for more data and insight, many organisations have realised that they were woefully unprepared to deliver. The result has been a spate of data transformation programmes that launch, only to struggle to gain traction. Technology is most often blamed for the failure; however, on closer inspection, many of these programmes fall into one of two traps.

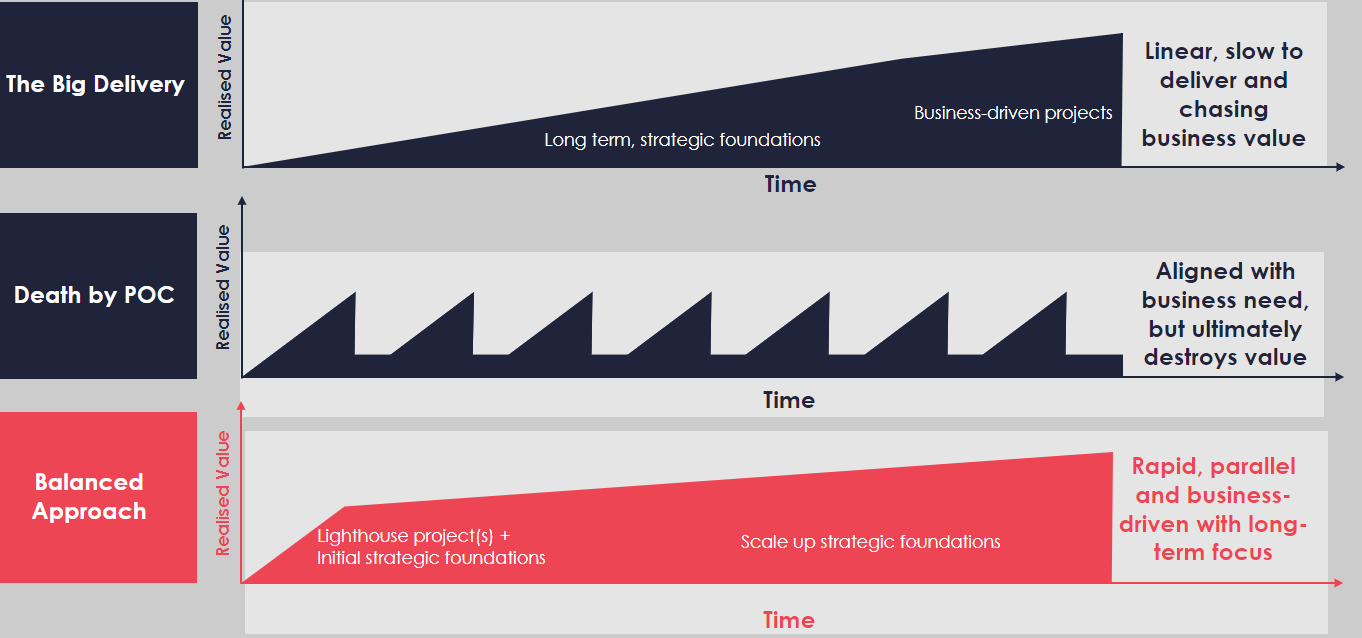

- The Big Delivery. This trap is common. The programme spends months or years locked away developing the ultimate data platform and supporting data management tools. This is usually done with minimal stakeholder engagement, or with only a handful of stakeholders, and culminates in a big reveal that does little to solve users’ challenges but is very pleasing to technical teams. Meanwhile, end users and leadership are left with little to show for the investment and revert to old behaviours to gain insight (usually in the form of a complicated spreadsheet).

- Death by POC. This trap is emerging more frequently. Programmes actively engage end users to create a massive list of use cases for delivery. These are then built as proofs of concept (POCs) to show value quickly. The trap springs when either the funding to productionise a POC never arrives, or features keep being added on top of something that was never meant to grow. Data teams end up spending most of their time keeping this functionality from falling over, with limited technology to support them.

So, how do you avoid falling into one of these two traps?

First, you need to see the value within each of the traps. The Big Delivery is trying to build a foundation for effective data management. This is necessary for sustainability, but the trap builds it at the expense of delivering value to the organisation. Death by POC focuses heavily on rapid value creation and stakeholder engagement, which is essential to maintaining alignment with business strategy. Unfortunately, this comes at the expense of data and analytics resources, who quickly burn out trying to avert catastrophe in the tooling.

Second, with that value in mind, you need to approach delivery differently. A hybrid approach that combines foundation development with use case delivery has proven successful. It runs core work on platform, governance, and operating model alongside the delivery of business use cases. The use cases delivered are sometimes smaller in scope, but each is delivered with a full slate of capability across process, people, technology, and data, so it can stand alone without intensive management. We call this a lighthouse project.

Finally, you need to identify and plan for transition states in your programme. Organisational patience for any transformation runs thin, even when you are balancing foundation development with use case delivery, so identifying the points at which the programme can accumulate (and celebrate) value is key to maintaining stakeholder engagement. These might align with the delivery of your lighthouse projects, with each shining the way to the next, or with the landing of foundational capability in anticipation of emergent use cases. However you plot these transition states, think of them as points where you could stop the programme and everyone would still see what had been delivered as a success.

If you are able to balance lighthouse and foundational delivery, your value realisation will scale much more effectively than it would under the Big Delivery or Death by POC.

Moving to this way of thinking doesn’t happen overnight and requires quite a bit of planning. We recommend developing a data strategy as the key step in identifying the capabilities to be developed, the use cases to be delivered, and the appropriate transition points along the way. Typically, building and implementing a strategy follows these steps:

- Discover – clearly identify the value of data to the organisation, the use cases creating pain, and where you need to create foundational capability. Set an attainable vision from here.

- Define – sketch out the core changes you are going to make, identify transition states to get to those changes, and prioritise the use cases you plan on delivering.

- Plan – scope out lighthouse projects, then plan a roadmap that delivers foundation projects and lighthouse projects in parallel. Make certain your transition states are clear, then go and seek funding.

- Execute/Adapt – deliver to your plan, but be prepared for things not to go your way. The ability to adapt and pivot will be key to success.

The Oakland Group Data Strategy Guide has some great tips and an approach that can get you started on this path. Here at Oakland, we have many years of experience helping a wide variety of clients with these challenges. You could say we’ve been there and done that, so we know what works and, crucially, what doesn’t. If you’d like to find out more, please email jeff.gilley@theoaklandgroup.co.uk.

Author: Jeff Gilley

Jeff is a Principal Consultant here at Oakland, focused on helping organisations with data strategy, data governance, and data architecture. Over the past 23 years, Jeff has worked with some of the largest organisations in the world on transforming how they leverage data to improve customer experience, drive efficiencies, and make better decisions.